Build Data Pipeline

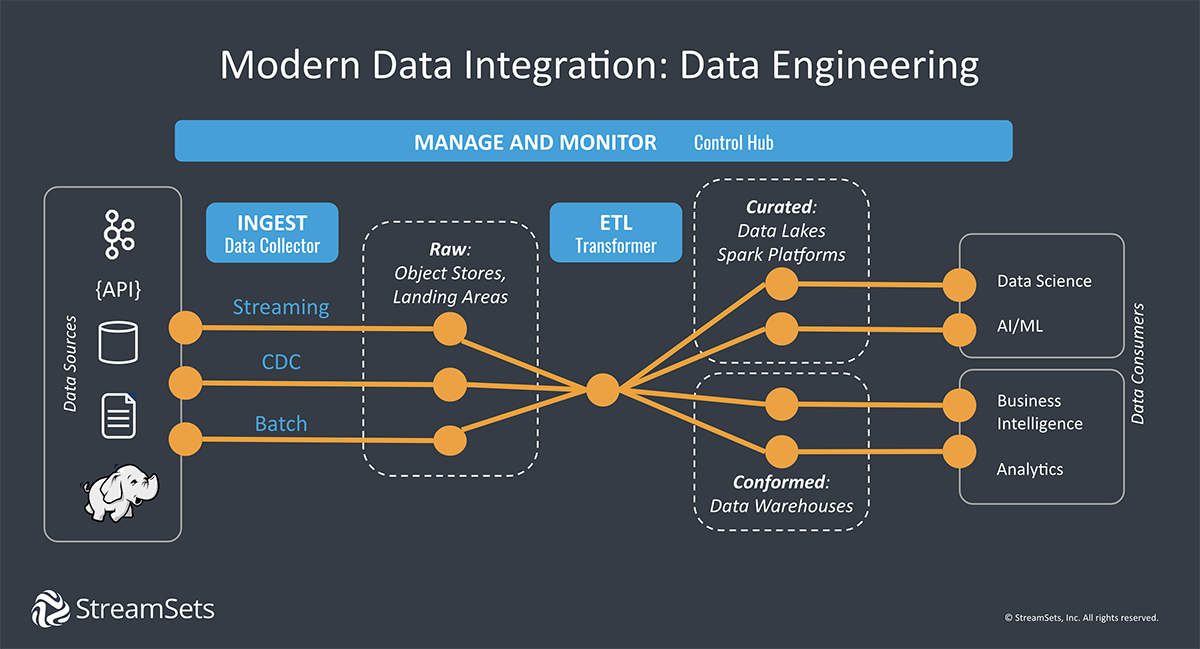

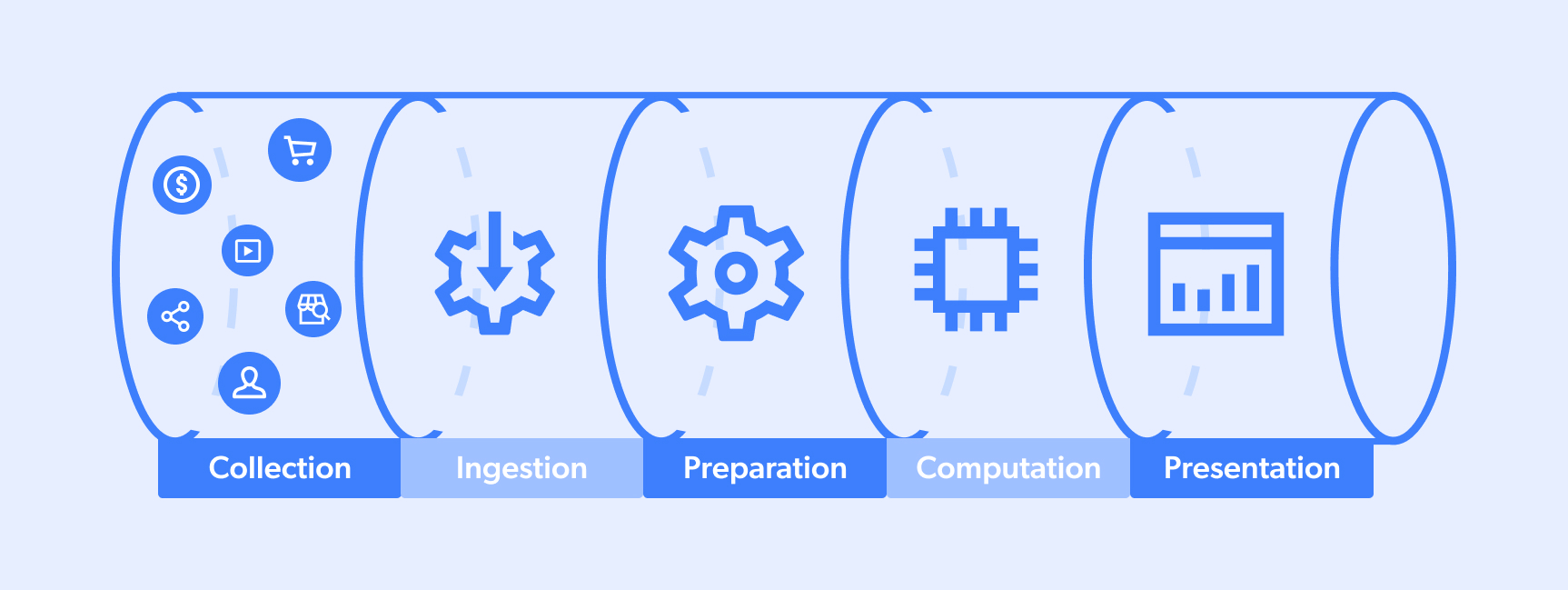

Build Data Pipeline - One key skill you'll need to master is building data pipelines. What is a data pipeline? A data pipeline is simply a set of processes that move data from one place to another, often transforming it along the way. Data may be transformed and updated before it is stored in a data repository. A data pipeline improves data management by consolidating and storing data from different sources, and data pipelines help with four key aspects of effective data management. Data pipelines are a significant part of the big data domain. A DevOps pipeline, by comparison, is a set of automated processes that simplifies the development process through the development, testing, and deployment phases of software.

The Hugging Face engineers plan to tap the Science Cluster to generate datasets similar to those DeepSeek used to create R1.

How to build a data pipeline from scratch: a data pipeline architecture is typically composed of three key elements: the source, where data is ingested; the processing action, where data is transformed; and the destination, where the results are stored. Building an effective data pipeline involves a systematic approach encompassing several key stages. Get started with data ingestion, data transformation, and model training. A well-designed pipeline will meet the needs of its use case.
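The three elements of a pipeline (a source, a processing step, and somewhere to store the result) can be sketched in a few lines of Python. This is only a minimal illustration using the standard library: the inline CSV text, the `items` table, and the function names `ingest`, `transform`, `store`, and `run_pipeline` are all hypothetical and chosen for the example, not taken from any particular tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a real source (file, API, queue).
RAW_CSV = """name,rating
 Widget ,4.5
Gadget,not-a-number
gizmo,3.0
"""


def ingest(text):
    """Source: read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Processing: tidy names, parse ratings, skip rows that fail to parse."""
    cleaned = []
    for row in rows:
        try:
            rating = float(row["rating"])
        except ValueError:
            continue  # drop rows whose rating is not numeric
        cleaned.append((row["name"].strip().title(), rating))
    return cleaned


def store(rows, conn):
    """Destination: write the cleaned rows to a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS items (name TEXT, rating REAL)")
    conn.executemany("INSERT INTO items VALUES (?, ?)", rows)
    conn.commit()


def run_pipeline(text, conn):
    """Chain the three stages: source -> processing -> destination."""
    store(transform(ingest(text)), conn)


conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
run_pipeline(RAW_CSV, conn)
result = conn.execute("SELECT name, rating FROM items ORDER BY name").fetchall()
print(result)
```

Keeping each stage as its own function means a different source or destination can be swapped in without touching the transformation logic.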
Split data using a 60/40 split: 60% should go to a filter using Pearson correlation, and 40% should be used as test. Create a Pearson correlation feature selection using rating as the target column.
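One way to picture the 60/40 split and the Pearson correlation filter is the sketch below. It is not the workflow of any specific tool. The synthetic dataset, the `price` and `noise` columns, the fixed random seeds, and the choice to keep the top `k` features by absolute correlation are all assumptions made for illustration; only the 60/40 ratio and the `rating` target column come from the text.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)


def split_60_40(rows, seed=0):
    """Shuffle a copy of the rows, then return (60% part, 40% part)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 6 // 10
    return shuffled[:cut], shuffled[cut:]


def select_features(rows, target, k):
    """Keep the k columns with the largest absolute correlation to the target."""
    features = [col for col in rows[0] if col != target]
    ys = [row[target] for row in rows]
    scores = {f: abs(pearson([row[f] for row in rows], ys)) for f in features}
    return sorted(features, key=lambda f: scores[f], reverse=True)[:k]


# Synthetic data (hypothetical): rating depends on price, not on noise.
rng = random.Random(42)
rows = []
for _ in range(50):
    price = rng.uniform(1, 100)
    rows.append({"price": price, "noise": rng.uniform(0, 1), "rating": 5 - price / 25})

filter_part, test_part = split_60_40(rows)  # 60% for the filter, 40% held out as test
selected = select_features(filter_part, target="rating", k=1)
print(len(filter_part), len(test_part), selected)
```

Computing the correlation scores only on the 60% portion keeps the 40% test portion untouched until evaluation.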
Creating a data pipeline for scraped data is essential for ensuring that the extracted data is cleaned, transformed, and stored in a usable format. This National Map training video will demonstrate how to create and implement a Point Data Abstraction Library (PDAL) pipeline script that will generate derivative elevation.

Building a Data Pipeline for Tracking Sporting Events Using AWS

How to Build a Scalable Big Data Analytics Pipeline by Nam Nguyen

Data Pipeline Types, Architecture, & Analysis

How To Build Real-Time Data Pipelines The Guide Estuary

Smart Data Pipelines Architectures, Tools, Key Concepts StreamSets

How to build a data pipeline Blog Fivetran

How to build a scalable data analytics pipeline Artofit

What is data pipeline architecture examples and benefits

How To Create A Data Pipeline Automation Guide Estuary

We Asked Hei And Mastery Logistics’ Lead Machine Learning Engineer Jessie Daubner About Which Tools And Technologies They Use To Build Data Pipelines And What Steps.

Good Data Pipeline Architecture Is Critical To Solving The 5 V’s Posed By Big Data:

Volume, Velocity, Veracity, Variety, And Value.

To Build A Training Pipeline, The Team Is Soliciting.

Related Post:

![How To Create A Data Pipeline Automation Guide Estuary](https://estuary.dev/static/5b09985de4b79b84bf1a23d8cf2e0c85/ca677/03_Data_Pipeline_Automation_ETL_ELT_Pipelines_04270ee8d8.png)