Building A Data Pipeline

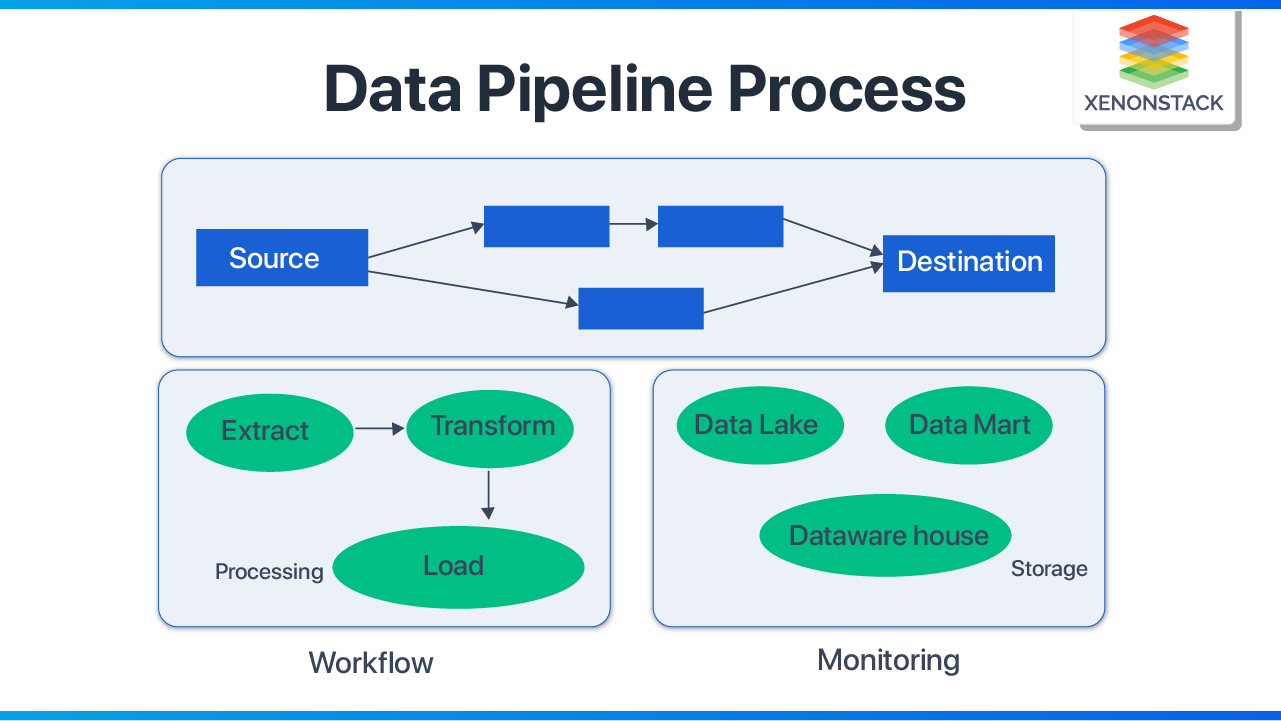

The main idea here is to provide you with a jump start on building a data pipeline from scratch; by following the steps outlined in this article, you can create a robust and efficient one. Data pipelines are a set of aggregated components that ingest raw data from disparate sources and move it to a predetermined destination for storage and analysis. They are a significant part of the big data domain, and building them is a key skill you'll need to master. A data pipeline improves data management by consolidating and storing data from different sources. Imagine pulling data from multiple sources, some structured and others messy: data consistency is a significant challenge, and establishing best practices for designing, building, and maintaining data pipelines is essential for managing that complexity. The same structure shows up in machine learning work, where a pipeline includes data loading, preprocessing, feature engineering, model training, and evaluation. Building a RAG pipeline isn't a walk in the park either.
What is a data pipeline in architectural terms? A data pipeline architecture is typically composed of three key elements: the source, where data is ingested; the processing action, where data is transformed; and the destination, where the data is stored for analysis. The ETL (extract, transform, load) pipeline is the backbone of data processing and analysis, and you can get started building one with data ingestion, data transformation, and model training. In a streaming setup, for example, you parse the source data stream to convert it into JSONL format using a continuous query, then configure the target to write the parsed data using FileWriter.
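The source, processing, and destination elements can be sketched in plain Python. This is a minimal illustration, not the continuous query or FileWriter configuration of any particular streaming product: it assumes the source is a CSV text stream, and the function names (`read_source`, `to_jsonl`, `write_target`, `run_pipeline`) are hypothetical.

```python
import csv
import io
import json
from typing import Iterable, Iterator, TextIO


def read_source(stream: TextIO) -> Iterator[dict]:
    """Source: ingest raw rows from a CSV stream, one dict per row."""
    yield from csv.DictReader(stream)


def to_jsonl(records: Iterable[dict]) -> Iterator[str]:
    """Processing: serialize each record as one JSON object per line (JSONL)."""
    for record in records:
        yield json.dumps(record, sort_keys=True)


def write_target(lines: Iterable[str], target: TextIO) -> int:
    """Destination: write the JSONL lines and return how many were written."""
    count = 0
    for line in lines:
        target.write(line + "\n")
        count += 1
    return count


def run_pipeline(source: TextIO, target: TextIO) -> int:
    """Chain the three stages; generators keep only one record in memory."""
    return write_target(to_jsonl(read_source(source)), target)
```

Because each stage is a generator, records flow through one at a time, which is roughly the behavior a streaming pipeline aims for. In practice `source` and `target` would be opened files rather than in-memory buffers.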
Building a data pipeline requires careful planning, design, and implementation, and building an effective one involves a systematic approach encompassing several key stages. In a data pipeline, data may be transformed and updated before it is stored in a data repository. Data pipelines help with key aspects of effective data management, and they also help ensure error handling and data validation. In part 1, we built a real estate web scraper using Selenium, extracting property listings from Redfin, including prices, addresses, bed/bath counts, square footage, and geo data.
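As a rough sketch of validation and error handling before storage, the code below cleans scraped listing records and sets aside the ones that fail. The field names (`address`, `price`, `beds`, `baths`, `sqft`) and the string formats are assumptions for illustration; the actual scraper output may differ.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Listing:
    address: str
    price: int   # whole US dollars
    beds: float
    baths: float
    sqft: int


def parse_listing(raw: Dict[str, object]) -> Listing:
    """Transform one raw scraped record; raise ValueError if it is invalid."""
    address = str(raw.get("address", "")).strip()
    if not address:
        raise ValueError("missing address")
    try:
        # Assumed formats: "$450,000" for price, "1,800" for square footage.
        price = int(str(raw["price"]).replace("$", "").replace(",", ""))
        beds = float(raw["beds"])
        baths = float(raw["baths"])
        sqft = int(str(raw["sqft"]).replace(",", ""))
    except (KeyError, TypeError, ValueError) as exc:
        raise ValueError(f"bad field in {address!r}: {exc}") from exc
    if price <= 0 or sqft <= 0:
        raise ValueError(f"non-positive price or sqft in {address!r}")
    return Listing(address, price, beds, baths, sqft)


def validate_all(
    raws: Iterable[Dict[str, object]],
) -> Tuple[List[Listing], List[Tuple[Dict[str, object], str]]]:
    """Split records into clean listings and (record, reason) rejections."""
    clean: List[Listing] = []
    rejected: List[Tuple[Dict[str, object], str]] = []
    for raw in raws:
        try:
            clean.append(parse_listing(raw))
        except ValueError as err:
            rejected.append((raw, str(err)))
    return clean, rejected
```

Keeping rejected records alongside the reason, rather than silently dropping them, makes it possible to audit what the pipeline discarded.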
Start by identifying data sources and destinations. In our case, we first needed data, so we wrote a custom script that crawls through the GitHub REST API; you can, for example, analyze GitHub data and build a simple dashboard.
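A crawl of the GitHub REST API might look roughly like the sketch below. The choice of the repository issues endpoint, the page limits, and the helper names (`http_get_json`, `crawl_issues`) are assumptions made for illustration; the original script is not shown here. The fetch function is injectable so the paging logic can be exercised without network access.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator, List

API_ROOT = "https://api.github.com"


def http_get_json(url: str) -> List[dict]:
    """Default fetcher: unauthenticated GET, so GitHub's rate limits apply."""
    request = urllib.request.Request(
        url, headers={"Accept": "application/vnd.github+json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def crawl_issues(
    owner: str,
    repo: str,
    fetch: Callable[[str], List[dict]] = http_get_json,
    per_page: int = 100,
    max_pages: int = 10,
) -> Iterator[dict]:
    """Yield issues page by page until a short or empty page is returned.

    Note: this endpoint also returns pull requests, which GitHub treats
    as issues.
    """
    for page in range(1, max_pages + 1):
        query = urllib.parse.urlencode(
            {"per_page": per_page, "page": page, "state": "all"}
        )
        batch = fetch(f"{API_ROOT}/repos/{owner}/{repo}/issues?{query}")
        if not batch:
            return
        yield from batch
        if len(batch) < per_page:
            return  # last page reached
```

For anything beyond a small experiment, an authenticated request and handling of rate-limit responses would be needed; both are left out to keep the sketch short.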

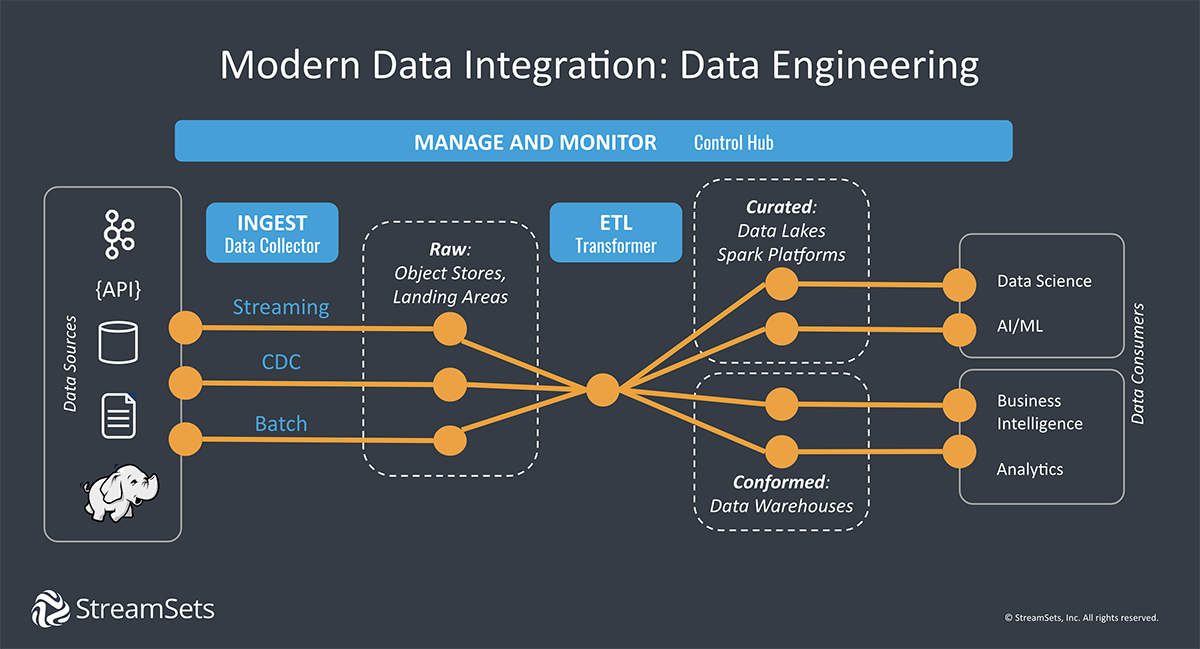
