Building LLMs for Production

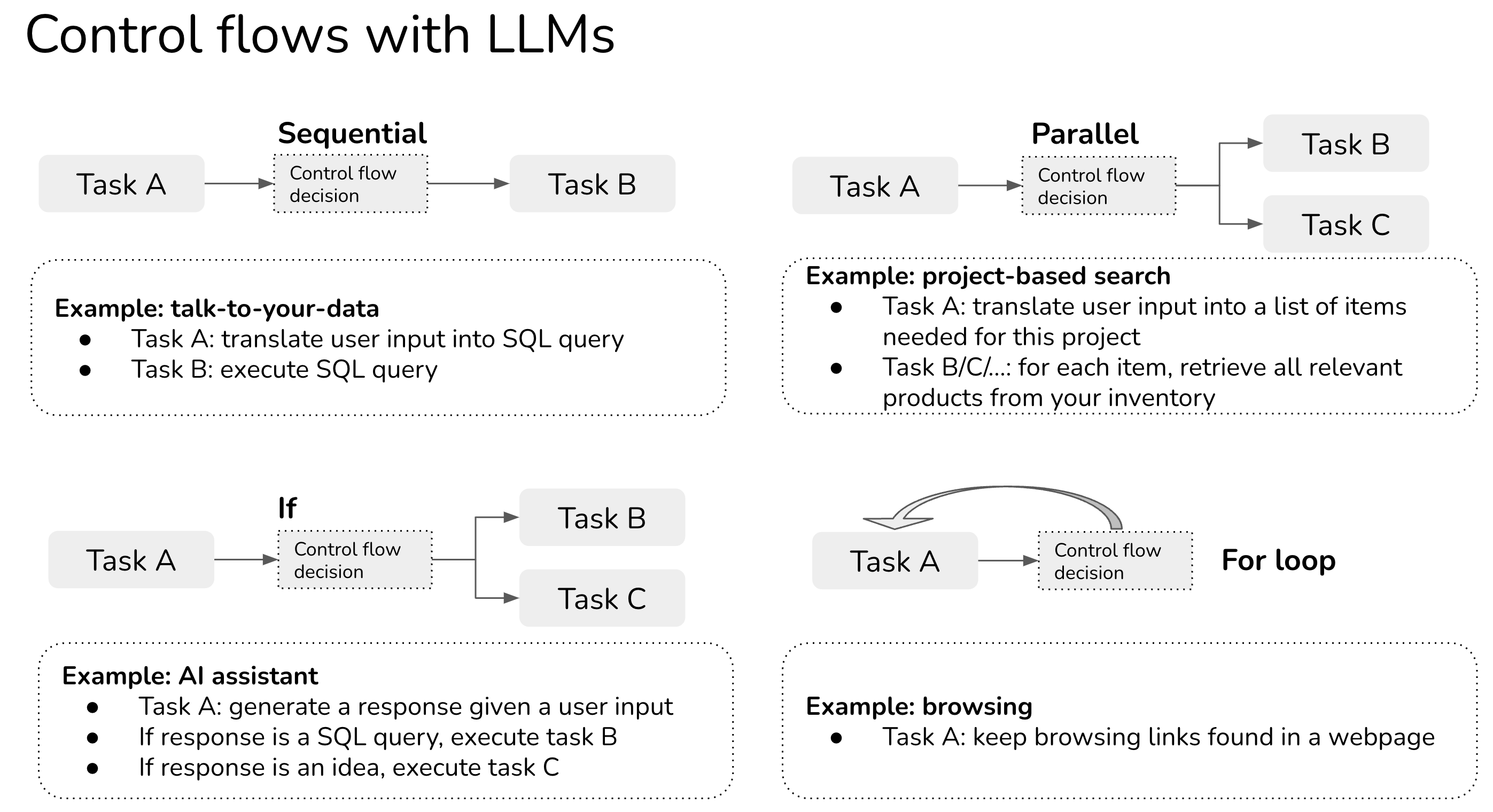

LLMs (large language models) have tremendous potential to enable new types of AI applications. "LLM apps" is short for large language model applications. This post demonstrates how to use … In this guide, learn how to build and deploy LLM applications for production, focusing on effective strategies and best practices. For production, consider customizing the prompt further. The guide also provides strategies for deploying LLM applications into production, focusing on load balancing, managing cost and latency, and ensuring scalability.
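
A minimal sketch of two of those levers, assuming each model deployment can be wrapped as a plain Python callable that takes a prompt and returns a completion: requests rotate round-robin across interchangeable backends (load balancing), and repeated prompts are answered from an in-memory cache (cost and latency). The `LLMRouter` class and its fields are hypothetical illustrations, not part of any library.

```python
import itertools
import time
from typing import Callable, Dict, List

# Hypothetical backend type: any function mapping a prompt to a completion.
Backend = Callable[[str], str]


class LLMRouter:
    """Round-robin router with an exact-match response cache (illustrative only)."""

    def __init__(self, backends: List[Backend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = backends
        self._next_index = itertools.cycle(range(len(backends)))
        self._cache: Dict[str, str] = {}
        self.calls_per_backend = [0] * len(backends)  # load-distribution counter
        self.latencies: List[float] = []              # seconds per uncached call

    def complete(self, prompt: str) -> str:
        # Cache hit: no backend call, so no model cost and minimal latency.
        if prompt in self._cache:
            return self._cache[prompt]
        # Cache miss: send the request to the next backend in rotation.
        idx = next(self._next_index)
        start = time.perf_counter()
        result = self._backends[idx](prompt)
        self.latencies.append(time.perf_counter() - start)
        self.calls_per_backend[idx] += 1
        self._cache[prompt] = result
        return result
```

An exact-match cache only pays off when identical prompts recur and reusing an earlier answer is acceptable, for example with deterministic (temperature 0) generation; eviction, health checks, and failover are left out of this sketch.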

To start a proof of concept (PoC) or minimum viable product (MVP), most teams connect to an LLM available through an API. In this article, we delve deeper into the best practices for deploying LLMs, considering factors such as the importance of data, cost effectiveness, prompt engineering, and fine-tuning. Through prompt tuning, evaluation, experimentation, orchestration, and LLMOps for automation, prompt flow significantly accelerates the journey from development to production.
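
The evaluation-and-experimentation loop can be illustrated without any framework. The sketch below is not prompt flow's API; it is a plain-Python stand-in that scores hypothetical prompt templates against a small labeled test set using exact-match accuracy. `fake_model`, `TEMPLATES`, and `CASES` are made-up placeholders for a real model call and real evaluation data.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]

# Hypothetical stand-ins for a real model endpoint and a real evaluation set.
ANSWERS = {"capital of France": "Paris", "2 + 2": "4"}
CASES: List[Tuple[str, str]] = [("capital of France", "Paris"), ("2 + 2", "4")]
TEMPLATES = {
    "plain": "Q: {question}",
    "terse": "Answer in one word. Q: {question}",
}


def fake_model(prompt: str) -> str:
    # Finds the question embedded in the prompt; replies tersely only
    # when the prompt explicitly asks for a one-word answer.
    question = next(q for q in ANSWERS if q in prompt)
    answer = ANSWERS[question]
    return answer if "one word" in prompt else f"Sure! The answer is {answer}."


def evaluate_prompt(template: str, model: Model, cases: List[Tuple[str, str]]) -> float:
    """Exact-match accuracy of one prompt template over the test cases."""
    hits = 0
    for question, expected in cases:
        output = model(template.format(question=question))
        if output.strip().lower() == expected.strip().lower():
            hits += 1
    return hits / len(cases)


def pick_best_prompt(
    templates: Dict[str, str], model: Model, cases: List[Tuple[str, str]]
) -> Tuple[str, Dict[str, float]]:
    """Score every template and return the best one's name plus all scores."""
    scores = {name: evaluate_prompt(t, model, cases) for name, t in templates.items()}
    best = max(scores, key=lambda name: scores[name])
    return best, scores
```

Here `pick_best_prompt(TEMPLATES, fake_model, CASES)` prefers the "terse" template, because the verbose replies fail exact match. Exact match is the simplest possible metric; swapping in a task-appropriate scorer leaves the loop unchanged.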

Learn how successful companies build and improve LLM features in production by following a proven approach. Researchers developed Medusa, a framework that speeds up LLM inference by adding extra heads that predict multiple tokens simultaneously.
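
The core idea behind Medusa-style decoding can be shown with toy integer "models". In this simplified sketch (not the Medusa codebase), `toy_base` plays the base model's greedy next-token prediction and each function in `toy_heads` plays an extra head guessing a token further ahead. Drafted tokens are kept only while the base model agrees with them, so the output matches plain greedy decoding while a single step may emit several tokens. All names here are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

BaseModel = Callable[[Sequence[int]], int]
Head = Callable[[Sequence[int]], int]


def toy_base(seq: Sequence[int]) -> int:
    # Hypothetical base model: greedily predicts "previous token + 1" (mod 10).
    return (seq[-1] + 1) % 10


def head_plus_two(seq: Sequence[int]) -> int:
    # Extra head 1 guesses the token two positions ahead (always right here).
    return (seq[-1] + 2) % 10


def head_plus_three(seq: Sequence[int]) -> int:
    # Extra head 2 guesses three positions ahead, but after an odd token it
    # returns an impossible token (-1), so its draft gets rejected.
    return (seq[-1] + 3) % 10 if seq[-1] % 2 == 0 else -1


toy_heads: List[Head] = [head_plus_two, head_plus_three]


def greedy_decode(base: BaseModel, prompt: List[int], n_new: int) -> List[int]:
    """Reference decoder: one base-model step per generated token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(base(seq))
    return seq[len(prompt):]


def medusa_style_decode(
    base: BaseModel, heads: List[Head], prompt: List[int], n_new: int
) -> Tuple[List[int], int]:
    """Draft with extra heads, keep the longest prefix the base model agrees with.

    Returns (generated tokens, number of decoding steps). A real system checks
    the drafts in one batched forward pass; this loop checks them one by one.
    """
    seq = list(prompt)
    steps = 0
    while len(seq) - len(prompt) < n_new:
        steps += 1
        first = base(seq)                       # token from the original head
        drafts = [head(seq) for head in heads]  # speculative later tokens
        accepted = [first]
        for guess in drafts:
            if base(seq + accepted) != guess:   # stop at the first disagreement
                break
            accepted.append(guess)
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n_new], steps
```

With this toy setup, `medusa_style_decode(toy_base, toy_heads, [0], 6)` returns the same six tokens as `greedy_decode(toy_base, [0], 6)` but needs three steps instead of six. The real framework verifies many candidate continuations at once; the sequential check here is only for readability.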

We are thrilled to introduce Towards AI's new book "Building LLMs for Production: …". We are excited to announce the new and improved version of Building LLMs for Production; the latest version of the book offers an improved structure, fresher insights, and more. This comprehensive tutorial series is designed to provide practical …

Building LLM applications for production

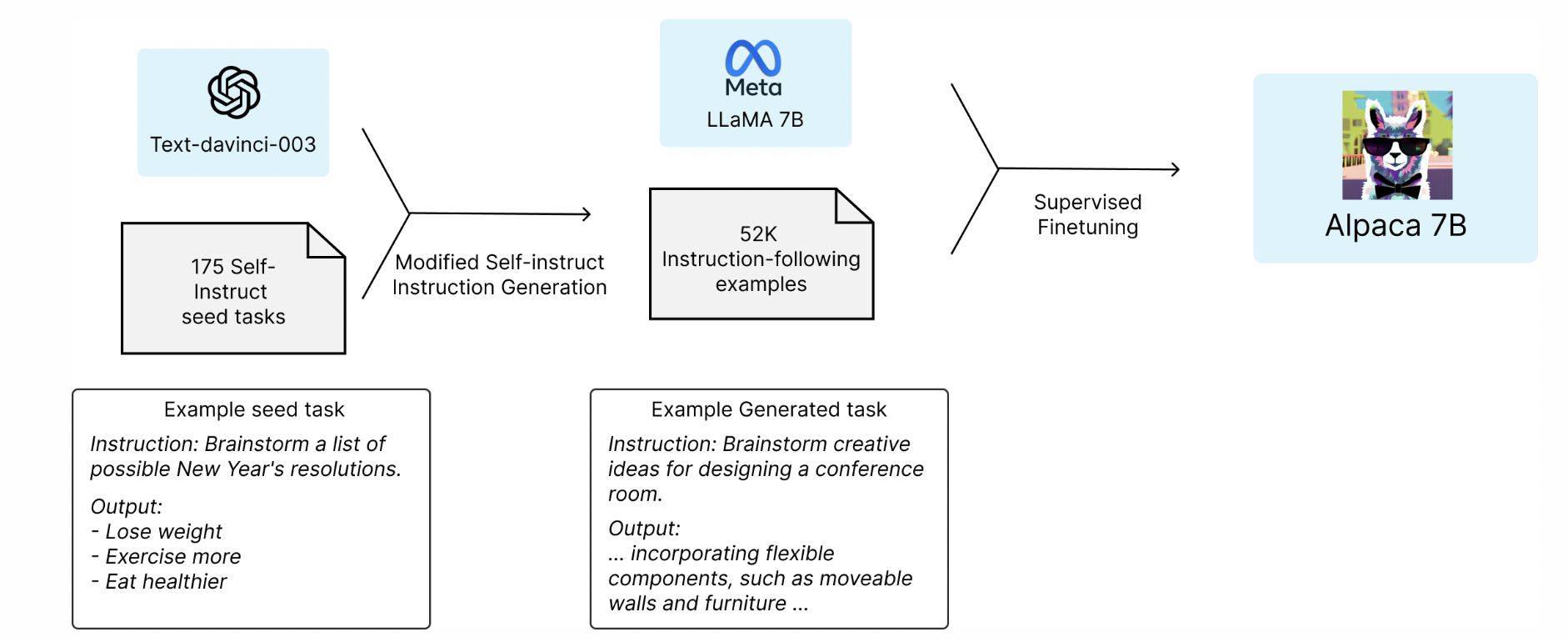

Practical Data Considerations for Building Production-Ready LLM

Building Production-Ready LLM Apps with LlamaIndex Document Metadata

LLM Spark: Your Ultimate Dev Platform for Building Production-Ready LLM

Building Successful LLM-Powered Products - FRANKI T

SymmetricalDataSecurity Building LLM Applications for Production

Building a production-ready LLM application with BerriAI, PropelAuth

Building LLMs for Production: Enhancing LLM Abilities and Reliability

Building LLM Applications For Production PDF

Building LLM Applications for Production // Chip Huyen // LLMs in Prod
