Building LLMs for Production

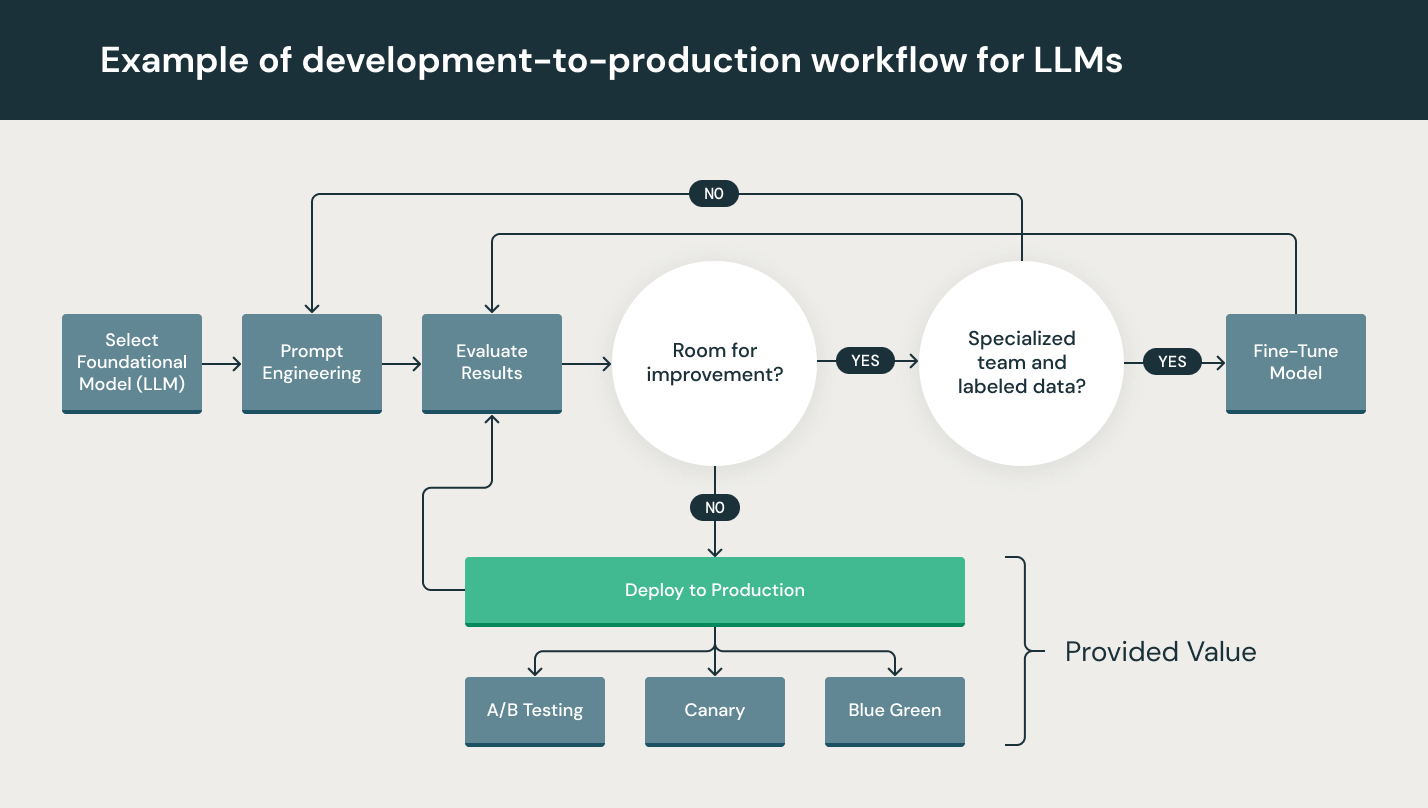

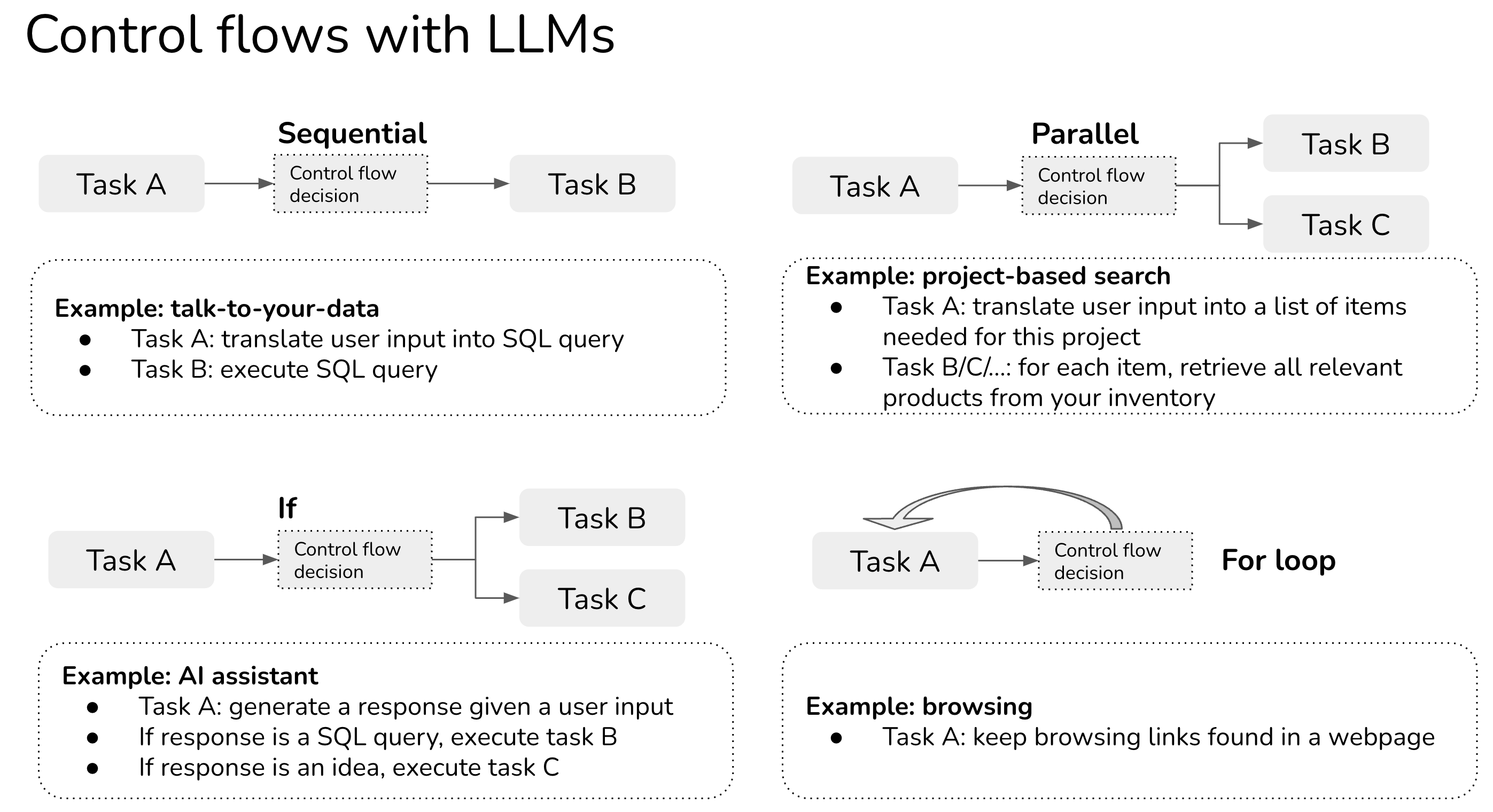

Building LLMs for Production, a book from Towards AI, teaches readers how to build large language models (LLMs) for various applications, from prompting to deployment, drawing on expert guidance. It serves as a guide for applying LLMs in practical settings and is notable for its straightforward explanations and numerous practical code examples, which help demystify complex concepts. The latest version offers an improved structure and fresher insights. The book covers best practices for deploying LLMs, considering factors such as the importance of data, cost effectiveness, prompt engineering, and fine-tuning, so that readers can build and scale LLMs tailored to their needs and solve challenges in scaling, optimizing, and maintaining them.
The book introduces the foundational and emerging trends in natural language processing (NLP), primarily LLMs, which have become a critical area of study with the rapid advances in artificial intelligence (AI) and NLP. The core idea behind retrieval-augmented generation (RAG) is introduced and discussed throughout the book, and two commonly used frameworks are presented before the practical examples. On the deployment side, CI/CD pipelines should support testing, staging, and production environments, ensuring the model functions properly at each stage.
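
The staged CI/CD idea above can be sketched as a promotion gate. This is a minimal illustration under assumptions not taken from the book: the model is any Python callable from prompt to text, each environment has a hypothetical evaluation set of (prompt, expected substring) pairs, and the pass-rate thresholds in `STAGES` are made up. A real pipeline would run checks like these inside a CI system rather than a single script.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: takes a prompt, returns a completion.
Model = Callable[[str], str]
Case = Tuple[str, str]  # (prompt, substring the output must contain)

# Environments in promotion order, each with an illustrative minimum pass rate.
STAGES: List[Tuple[str, float]] = [
    ("testing", 1.0),
    ("staging", 0.9),
    ("production", 0.95),
]


def pass_rate(model: Model, cases: List[Case]) -> float:
    """Fraction of cases whose output contains the expected substring.

    An empty evaluation set scores 0.0, so a stage with no checks fails
    its gate instead of passing silently.
    """
    if not cases:
        return 0.0
    hits = sum(1 for prompt, expected in cases if expected in model(prompt))
    return hits / len(cases)


def promote(model: Model, eval_sets: Dict[str, List[Case]]) -> List[str]:
    """Run each stage in order and stop at the first failed gate.

    Returns the stages the model passed, in order.
    """
    passed: List[str] = []
    for stage, threshold in STAGES:
        if pass_rate(model, eval_sets.get(stage, [])) < threshold:
            break
        passed.append(stage)
    return passed


# Toy model that only knows one fact, used to show a gate failing.
def toy_model(prompt: str) -> str:
    return "Paris" if "France" in prompt else "unknown"


evals: Dict[str, List[Case]] = {
    "testing": [("Capital of France?", "Paris")],
    "staging": [("Capital of France?", "Paris"), ("Capital of Peru?", "Lima")],
    "production": [("Capital of France?", "Paris")],
}
print(promote(toy_model, evals))  # ['testing']
```

Here the toy model clears the testing gate but scores 0.5 on staging, so it never reaches production. Stopping at the first failure mirrors the requirement that the model work at each stage before moving on.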
With the rise of LLMs, language modeling has become an essential part of NLP. Language modeling means learning the probability distribution of words within a language based on a large corpus, and Transformer models help LLMs understand the context in which those words appear. The book is also available on the O'Reilly learning platform and aims to help readers take LLMs from concept to production with confidence.
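
To make "learning the probability distribution of words" concrete, here is a toy bigram model that estimates P(next word | previous word) by counting adjacent word pairs in a tiny corpus. It only illustrates the idea: LLMs learn these distributions with Transformer networks conditioned on long contexts, not with pair counts. The function names `train_bigram` and `sentence_prob` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List

Probs = Dict[str, Dict[str, float]]


def _tokens(sentence: str) -> List[str]:
    # <s> and </s> mark the start and end of each sentence.
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram(corpus: List[str]) -> Probs:
    """Estimate P(next | prev) from counts of adjacent word pairs."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        toks = _tokens(sentence)
        for prev, nxt in zip(toks, toks[1:]):
            counts[prev][nxt] += 1
    # Normalize each word's follower counts into a probability distribution.
    probs: Probs = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        probs[prev] = {nxt: c / total for nxt, c in followers.items()}
    return probs


def sentence_prob(probs: Probs, sentence: str) -> float:
    """Probability of a sentence as the product of its bigram probabilities."""
    toks = _tokens(sentence)
    p = 1.0
    for prev, nxt in zip(toks, toks[1:]):
        p *= probs.get(prev, {}).get(nxt, 0.0)  # unseen pairs get 0
    return p


corpus = [
    "the model answers the question",
    "the model writes code",
]
probs = train_bigram(corpus)
print(probs["the"])    # {'model': 0.6666666666666666, 'question': 0.3333333333333333}
print(probs["model"])  # {'answers': 0.5, 'writes': 0.5}
```

Unseen word pairs get probability zero here, which is one reason count-based models generalize poorly and neural language models replaced them.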
The book shows how to use LLMs for various NLP tasks in different industries. From decisions such as which LLM to use to how to optimize LLM latency, it highlights the relevant tools and techniques to guide readers on their journey. It was written by more than 10 people on the Towards AI team.

Understanding graph neural networks (GNNs) and their application in retrieval

Practical Data Considerations for Building Production-Ready LLM

Building Production-Ready LLM-Driven Apps

Building LLM Applications for Production // Chip Huyen // LLMs in Prod

Building LLMs for Production Book

Building LLM Products Panel // LLMs in Production Conference Part 2

Building LLM applications for production

Deploy LLMs in Production: LLM Deployment Challenges

LLMOps: Operationalizing Large Language Models // Databricks

SymmetricalDataSecurity // Building LLM Applications for Production

Building LLMs for Production: Enhancing LLM Abilities and Reliability

“Building LLMs for Production” explores various RAG techniques and how they can be implemented in production environments.


The book also explores various methods to adapt foundational LLMs to specific use cases with enhanced accuracy, reliability, and scalability.

LangSmith is a comprehensive platform designed to support the development, deployment, and monitoring of LLM applications.
