How to Build a Data Pipeline

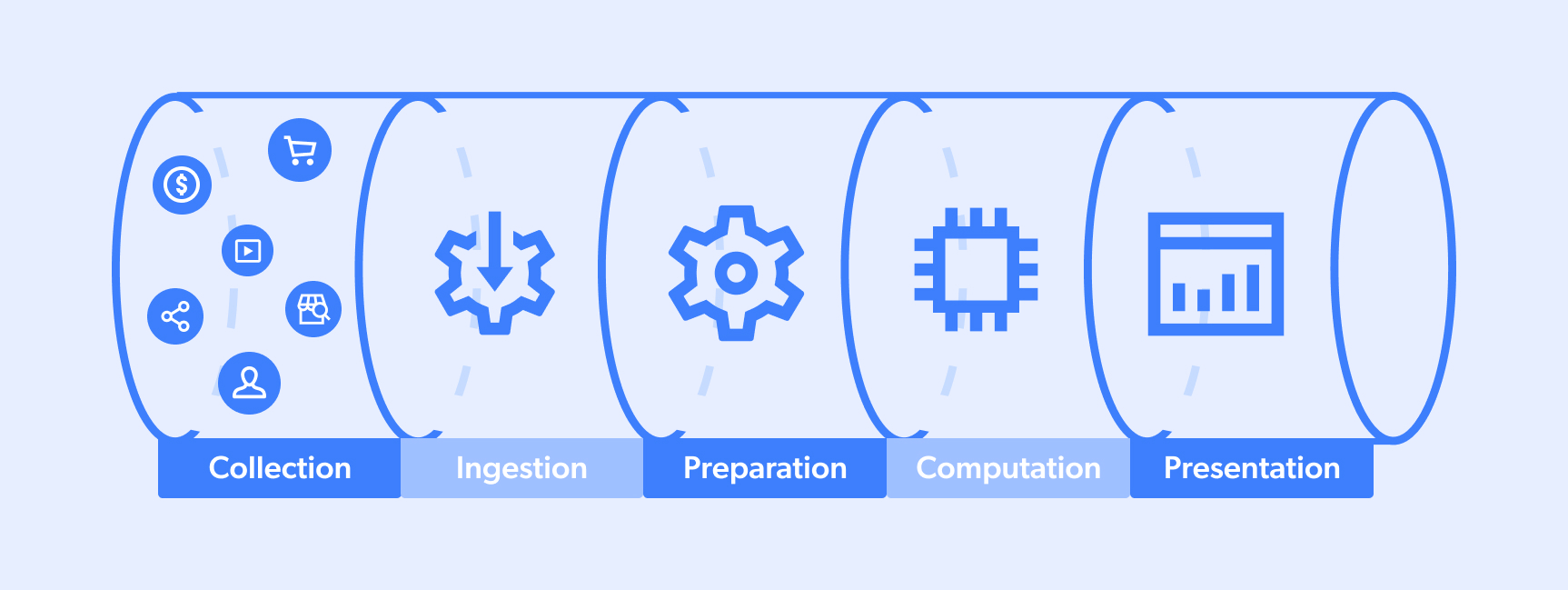

A data pipeline is a set of processes that move data from one place to another, often transforming it along the way. More formally, it is a systematic sequence of components designed to automate the extraction, organization, transfer, transformation, and processing of data from one or more sources to a designated destination. A data pipeline architecture is typically composed of three key elements: the source, where data is ingested; the processing action, where data is transformed; and the destination, where the processed data is delivered.

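
The source, processing, and destination elements can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the order records, the `amount_cents` field, and the in-memory list standing in for a destination are all assumptions made up for the example.

```python
from typing import Iterable, Iterator

# Hypothetical record shape used only for this sketch.
RawRecord = dict[str, str]


def source() -> Iterator[RawRecord]:
    """Source: where data is ingested (hard-coded rows stand in for a real system)."""
    yield {"customer": " alice ", "amount": "19.99"}
    yield {"customer": "BOB", "amount": "5.00"}


def process(records: Iterable[RawRecord]) -> Iterator[dict]:
    """Processing: tidy the customer name and convert the amount to integer cents."""
    for rec in records:
        yield {
            "customer": rec["customer"].strip().title(),
            "amount_cents": round(float(rec["amount"]) * 100),
        }


def run_pipeline(destination: list) -> list:
    """Destination: where processed records land (a list stands in for a database)."""
    destination.extend(process(source()))
    return destination


warehouse = run_pipeline([])
print(warehouse)
```

Because `source` and `process` are generators, records flow through one at a time instead of being loaded all at once, which is one common way to keep memory use flat as volume grows.
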
Dmitriy Rudakov, Director of Solutions Architecture at Striim, describes a data pipeline as “a program that moves data ...”. In a data pipeline, data may be transformed and updated before it is stored in a data repository. A data pipeline also improves data management by consolidating and storing data from different sources. Good data pipeline architecture is critical to solving the 5 V's posed by big data: volume, velocity, veracity, variety, and value.

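
Consolidating data from different sources might look like the following sketch. The two inline sources (one CSV, one JSON), the `id` and `name` fields, and the "later source wins" conflict rule are assumptions chosen for illustration; a real pipeline would pick its own merge policy.

```python
import csv
import io
import json

# Two hypothetical sources holding overlapping records in different formats.
CSV_SOURCE = "id,name\n1,Ada\n2,Grace\n"
JSON_SOURCE = '[{"id": 2, "name": "Grace Hopper"}, {"id": 3, "name": "Linus"}]'


def read_csv(text: str) -> list:
    """Parse CSV text into records with an integer id."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"id": int(row["id"]), "name": row["name"]} for row in reader]


def read_json(text: str) -> list:
    """Parse a JSON array into records with the same shape as read_csv."""
    return [{"id": int(item["id"]), "name": item["name"]} for item in json.loads(text)]


def consolidate(*batches: list) -> dict:
    """Merge batches into one store keyed by id; later batches win on conflict."""
    store = {}
    for batch in batches:
        for rec in batch:
            store[rec["id"]] = rec
    return store


store = consolidate(read_csv(CSV_SOURCE), read_json(JSON_SOURCE))
for rec_id in sorted(store):
    print(rec_id, store[rec_id]["name"])
```

Normalizing every source into the same record shape before merging is what lets the consolidation step stay a plain dictionary update.
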
Building a data pipeline requires careful planning, design, and implementation, and an effective one follows a systematic approach with several key stages, from design to deployment. Before diving in, identify your data sources and destinations. One worked example starts with ingestion: “First we needed data, so we wrote a custom script that crawls through the GitHub REST API”; GitHub data gathered this way can then be analyzed and shown in a simple dashboard. Plan for regular security reviews as well. Once built, data pipelines improve developer productivity by offering structured, reusable frameworks for data ingestion, transformation, and delivery.

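
A crawl of the GitHub REST API like the one mentioned above could be sketched as follows. The source does not say which endpoint the original script used, so the choice of the repository issues listing, the `crawl_issues` and `summarize` helpers, the `octocat/hello-world` repository name, and the page limits are all assumptions. The fetcher is passed in as a parameter so the demo runs offline against a fake.

```python
import json
import urllib.request
from typing import Callable, Iterable, Iterator

API_ROOT = "https://api.github.com"


def http_get_json(url: str) -> list:
    """Default fetcher: one unauthenticated GET against the GitHub REST API."""
    request = urllib.request.Request(
        url, headers={"Accept": "application/vnd.github+json"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def crawl_issues(
    owner: str,
    repo: str,
    fetch: Callable[[str], list] = http_get_json,
    per_page: int = 100,
    max_pages: int = 5,
) -> Iterator[dict]:
    """Ingest: walk paginated issue listings until an empty page or max_pages."""
    for page in range(1, max_pages + 1):
        url = (
            f"{API_ROOT}/repos/{owner}/{repo}/issues"
            f"?state=all&per_page={per_page}&page={page}"
        )
        batch = fetch(url)
        if not batch:
            return
        yield from batch


def summarize(issues: Iterable[dict]) -> dict:
    """Transform: count issues by state, a figure a simple dashboard might show."""
    counts = {}
    for issue in issues:
        counts[issue["state"]] = counts.get(issue["state"], 0) + 1
    return counts


def fake_fetch(url: str) -> list:
    """Offline stand-in for the network call: one page of data, then nothing."""
    if url.endswith("page=1"):
        return [{"state": "open"}, {"state": "closed"}, {"state": "open"}]
    return []


summary = summarize(crawl_issues("octocat", "hello-world", fetch=fake_fetch))
print(summary)
```

Dropping the `fetch=fake_fetch` argument makes the same code call the live API, where unauthenticated requests are rate limited, so a real crawl would likely add a token and retry handling.
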
To get started, build a pipeline that covers data ingestion, data transformation, and model training. A well-built pipeline ensures data is collected, processed, and sent to where it is needed in an organized way. By working through these stages, you can create a robust and efficient data pipeline.

How to build a data pipeline Blog Fivetran

How To Create A Data Pipeline [Automation Guide] Estuary

How to Build a Scalable Big Data Analytics Pipeline by Nam Nguyen

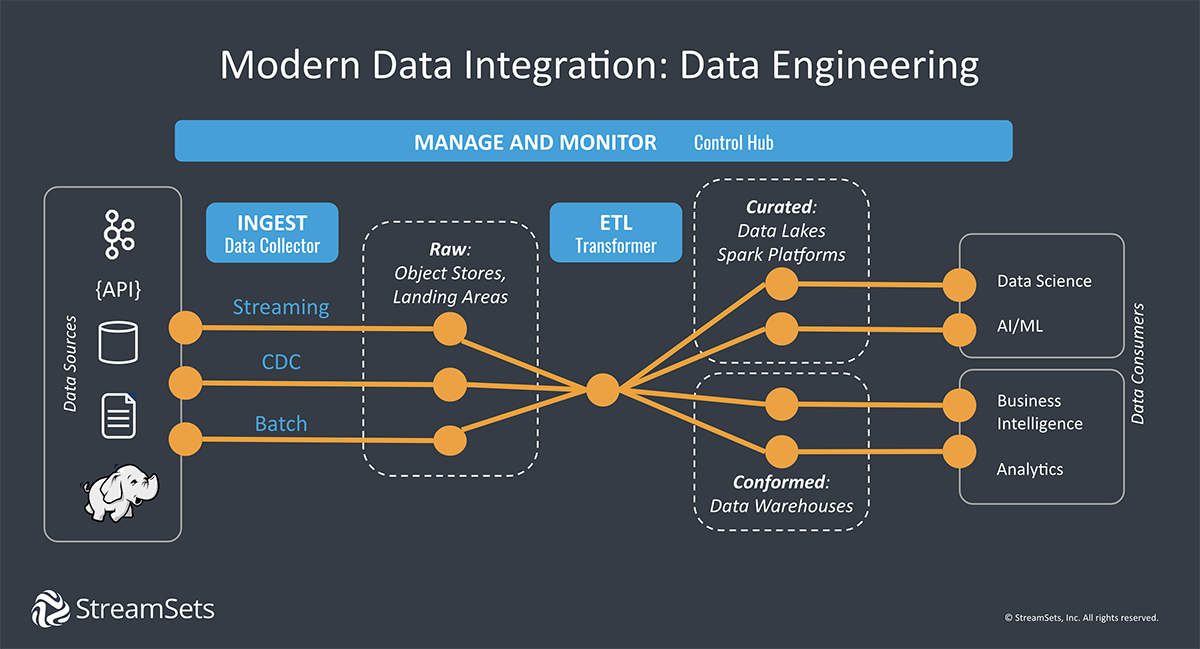

Smart Data Pipelines Architectures, Tools, Key Concepts StreamSets

Data Pipeline for AI and LLM Projects Build vs Buy Approach

Data Pipeline Types, Architecture, & Analysis

What is data pipeline architecture examples and benefits




