How To Build Data Pipelines

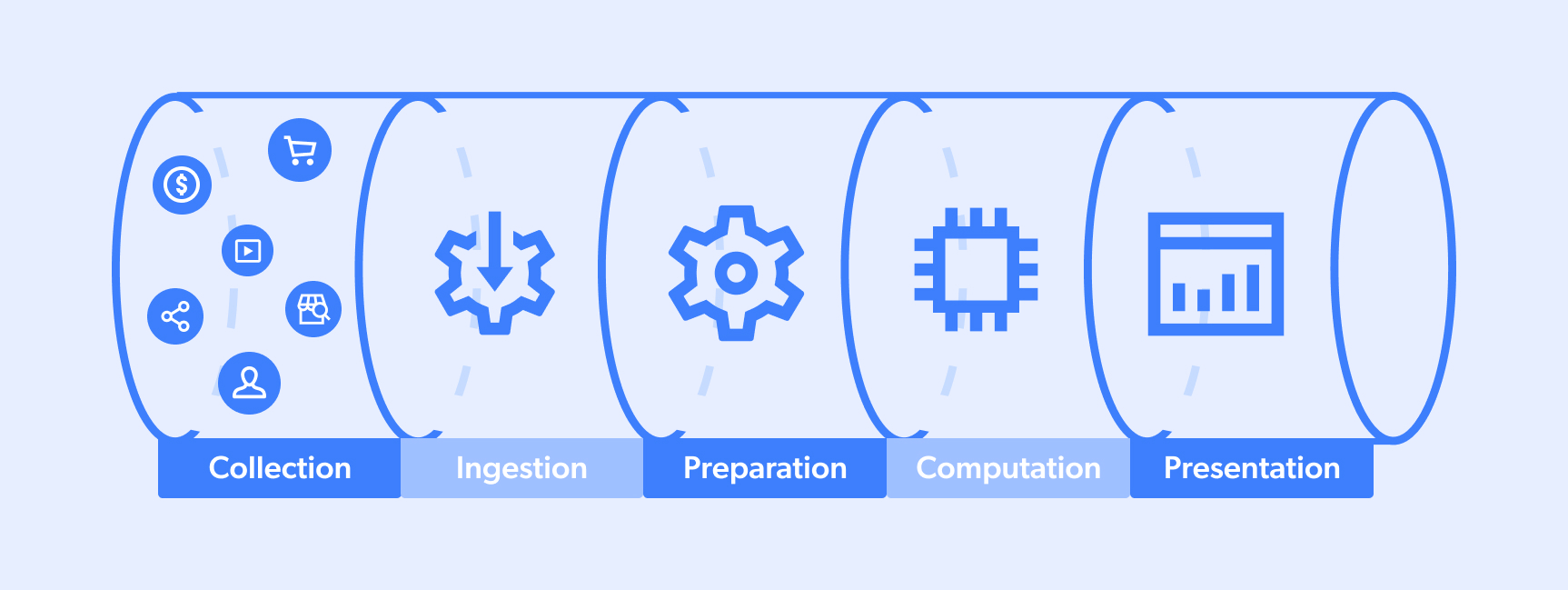

A data pipeline is a systematic sequence of components designed to automate the extraction, organization, transfer, transformation, and processing of data from one or more sources to a designated destination. Put another way, it is a set of components that ingest raw data from disparate sources and move it to a predetermined destination for storage and analysis. Along the way, data may be transformed and updated before it is stored in a data repository.

Data pipelines are the backbone of modern data processing because they carry data from acquisition through to storage and analysis. They improve data management by consolidating and storing data from different sources. They make integration more seamless and effective, giving organizations a comprehensive and unified view of their data landscape. They also improve developer productivity by offering structured, reusable frameworks for data ingestion, transformation, and delivery.
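The definition above (extract data from a source, transform it, and move it to a destination) can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the sample records, the `payments` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a real destination.

```python
import sqlite3

# Hypothetical raw records standing in for data pulled from a real source.
RAW_ROWS = [
    {"id": "1", "name": " Ada ", "amount": "10.5"},
    {"id": "2", "name": "Grace", "amount": "not-a-number"},
    {"id": "3", "name": "Linus", "amount": "7"},
]


def extract():
    """Return raw records; a real pipeline would read from an API, file, or database."""
    return list(RAW_ROWS)


def transform(rows):
    """Trim names, convert types, and skip rows whose amount is not numeric."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows that cannot be parsed
        cleaned.append((int(row["id"]), row["name"].strip(), amount))
    return cleaned


def load(rows, conn):
    """Write transformed rows to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


# Run the three stages in order against an in-memory destination.
conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
stored = conn.execute("SELECT id, name, amount FROM payments ORDER BY id").fetchall()
print(stored)
```

Keeping the three stages as separate functions means each can be tested or swapped out on its own, for example by replacing `extract` with a real API client.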
Building and operating data pipelines can be hard, but it doesn't have to be. When you build a data pipeline from scratch, the ETL (extract, transform, load) pipeline is the backbone of data processing and analysis. In one example, the authors first needed data, so they wrote a custom script that crawls through the GitHub REST API; with data like this you can, for instance, analyze GitHub activity and build a simple dashboard. Once data is scraped, it needs cleaning. Libraries can shorten this work: dlt is built for powerful simplicity, so you can perform robust ETL with just a few lines of code.

It also helps to make your data pipeline flexible enough to take input parameters, such as a start date. Finally, check that your pipeline meets regulatory requirements and industry standards by protecting sensitive data and maintaining compliance. To do this, try holding regular security reviews.
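The idea of a pipeline that accepts a start date as an input parameter can be sketched as follows. This is only an illustration under assumptions: the `EVENTS` records, the `run_for_window` name, and the optional `end_date` parameter are hypothetical and do not come from any particular tool.

```python
from datetime import date

# Hypothetical event records; a real run would fetch these from the source system.
EVENTS = [
    {"id": 1, "created": date(2024, 1, 5)},
    {"id": 2, "created": date(2024, 2, 10)},
    {"id": 3, "created": date(2024, 3, 15)},
]


def run_for_window(start_date, end_date=None, source=EVENTS):
    """Process only records created on or after start_date and before end_date."""
    if end_date is None:
        end_date = date.max  # no upper bound requested
    selected = [e for e in source if start_date <= e["created"] < end_date]
    # Placeholder for the real transform and load steps: return the processed ids.
    return [e["id"] for e in selected]


print(run_for_window(date(2024, 2, 1)))
print(run_for_window(date(2024, 2, 1), date(2024, 3, 1)))
```

Because the window is an argument rather than a hard-coded value, the same code can serve a regular scheduled run and a one-off rerun over an older date range.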
Dmitriy Rudakov, Director of Solutions Architecture at Striim, describes a data pipeline as “a program that moves data …” Pipelines enable businesses to collect, process, and analyze data. In a streaming pipeline, two of the steps are to parse the source data stream into JSONL format using a continuous query, and then to configure the target to write the parsed data using FileWriter.
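The step of parsing source data and writing the parsed records to a file can be illustrated in plain Python. This is not Striim's continuous query or FileWriter; it is a standard-library sketch of the same parse-then-write idea, and the `key=value;...` input format, the field names, and the output file name are assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical source lines in a made-up "key=value;key=value" format.
SOURCE_LINES = [
    "user=ada;action=login;ms=120",
    "malformed line",
    "user=grace;action=query;ms=300",
]


def parse_line(line):
    """Turn one raw line into a dict, or return None if it does not parse."""
    record = {}
    for part in line.split(";"):
        key, sep, value = part.partition("=")
        if not sep:
            return None  # no "=" found, so the line is malformed
        record[key.strip()] = value.strip()
    try:
        record["ms"] = int(record["ms"])
    except (KeyError, ValueError):
        return None  # missing or non-numeric duration
    return record


def write_jsonl(lines, path):
    """Parse each line and write the valid ones as JSONL; return the count written."""
    written = 0
    with open(path, "w", encoding="utf-8") as out:
        for line in lines:
            record = parse_line(line)
            if record is None:
                continue
            out.write(json.dumps(record) + "\n")
            written += 1
    return written


out_path = Path(tempfile.mkdtemp()) / "events.jsonl"
count = write_jsonl(SOURCE_LINES, out_path)
records = [json.loads(text) for text in out_path.read_text(encoding="utf-8").splitlines()]
print(count, records)
```

JSONL keeps one JSON object per line, so a downstream reader can process the file record by record without loading it all at once.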
Whether data is scraped or parsed from a source stream into JSONL with a continuous query, it needs cleaning once collected. Data cleaning and processing can be done with pandas. From there, you can get started building a data pipeline that covers data ingestion, data transformation, and model training.

How to build a scalable data analytics pipeline Artofit

How to build a data pipeline Blog Fivetran

Learn to Build Data Pipelines From Scratch to Scale

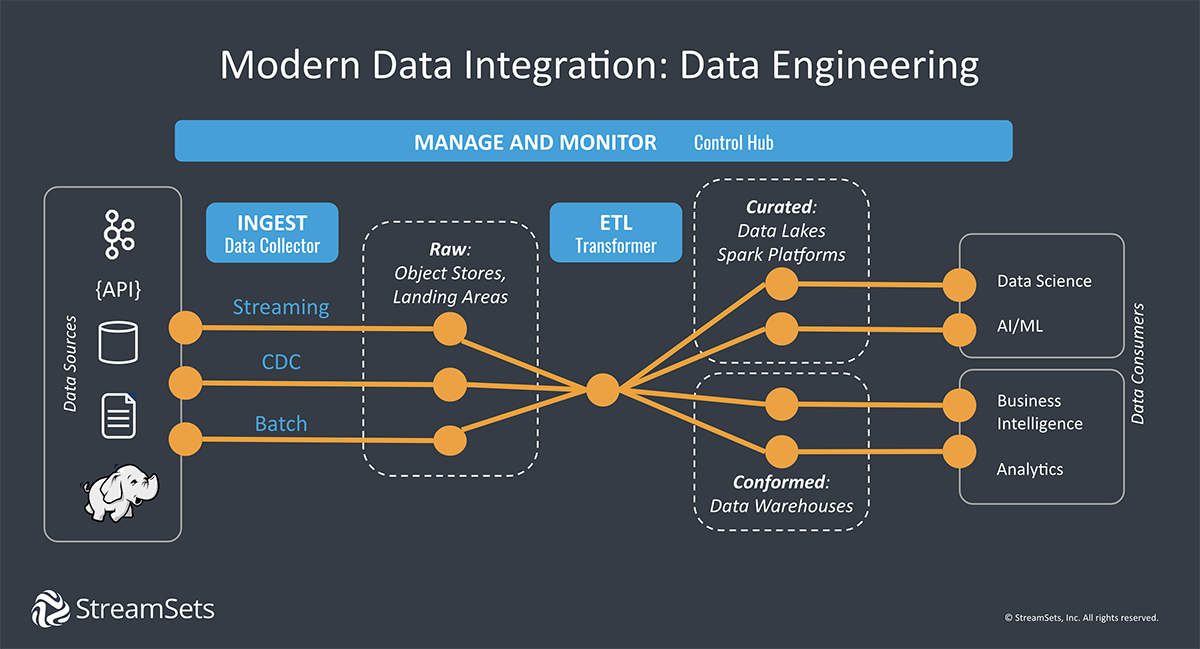

Smart Data Pipelines Architectures, Tools, Key Concepts StreamSets

Data Pipeline Types, Architecture, & Analysis

How To Create A Data Pipeline Automation Guide Estuary

What is data pipeline architecture examples and benefits

A Guide to Data Pipelines (And How to Design One From Scratch) Striim

How to Build a Scalable Big Data Analytics Pipeline by Nam Nguyen

![How To Create A Data Pipeline Automation Guide Estuary](https://estuary.dev/static/5b09985de4b79b84bf1a23d8cf2e0c85/ca677/03_Data_Pipeline_Automation_ETL_ELT_Pipelines_04270ee8d8.png)