How to Build an ETL Pipeline

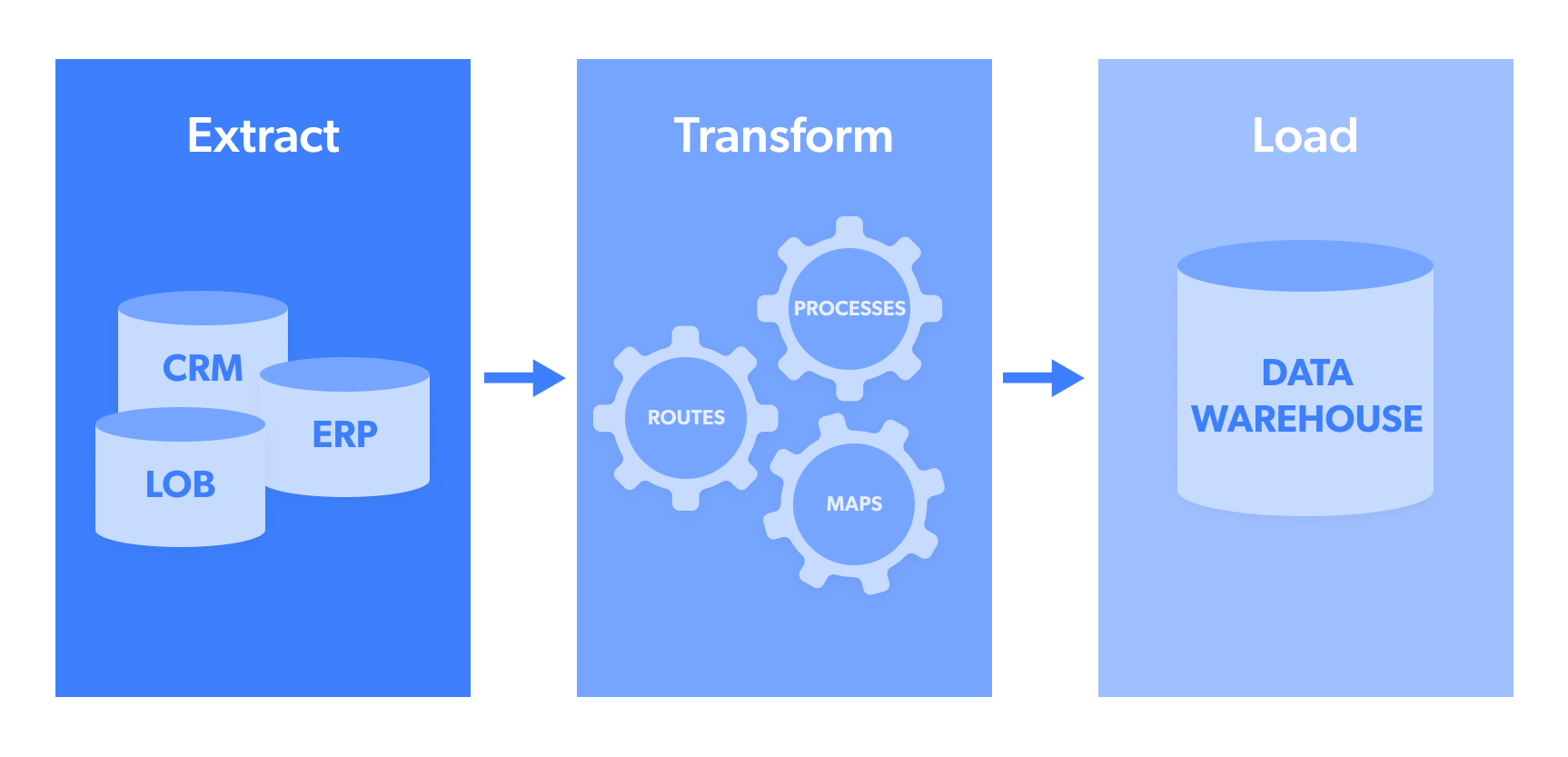

An ETL (extract, transform, load) pipeline is a set of processes that extracts data from a source, transforms it, and loads it into a destination. It automates the work of pulling data from various sources, which makes it the backbone of data processing and analysis. For organizations that want to manage and analyze their data effectively, building one is crucial.

Building a successful ETL pipeline requires careful planning and the right set of tools. The first key step is to identify the sources you need to extract from. Python, a powerful and versatile programming language with a wide array of libraries, is an efficient way to manage these data processing tasks.
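The three stages described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production design: the sample CSV, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all assumptions made up for the example, and an in-memory SQLite database stands in for a real destination.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for a real source file or API response.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount, tidy names, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].title(), float(row["amount"]))
        )
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    # INSERT OR REPLACE keeps the load safe to re-run for the same order_id.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(text, conn):
    """Run extract, transform, and load in order."""
    load(transform(extract(text)), conn)


conn = sqlite3.connect(":memory:")  # assumed destination for the sketch
run_pipeline(RAW_CSV, conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each stage as its own function means a source or destination can be swapped without touching the other two stages.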
Several tools can be used to build and run an ETL pipeline. Apache Airflow is a common choice for orchestrating the extract, transform, and load steps, and SQL Server Integration Services (SSIS) in Visual Studio 2019 can be used to build an ETL pipeline for database migration.

A common ETL pipeline design is a unified pipeline with an integration layer, which handles ingestion from different sources and filtering, placed in front of the core processing.
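One way to picture an integration layer is as a function that merges records from several source-specific readers and filters out unusable ones before core processing sees them. The sketch below assumes two made-up sources (a CSV string and a JSON string) and hypothetical names `ingest_csv`, `ingest_json`, `integration_layer`, and `core_processing`; it is an illustration of the idea, not a reference architecture.

```python
import csv
import io
import json

# Hypothetical inputs standing in for two different upstream systems.
CSV_SOURCE = "id,country,amount\n1,US,20\n2,DE,0\n"
JSON_SOURCE = (
    '[{"id": 3, "country": "us", "amount": 15},'
    ' {"id": 4, "country": "FR", "amount": null}]'
)


def ingest_csv(text):
    """Read CSV rows and yield them in a shared record shape."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {
            "id": int(row["id"]),
            "country": row["country"],
            "amount": float(row["amount"]),
        }


def ingest_json(text):
    """Read JSON items and yield them in the same record shape."""
    for item in json.loads(text):
        yield {
            "id": item["id"],
            "country": item["country"],
            "amount": item["amount"],
        }


def integration_layer(*sources):
    """Merge records from every source and filter out unusable ones."""
    for source in sources:
        for record in source:
            if record["amount"] is None or record["amount"] <= 0:
                continue  # filtering: drop missing or non-positive amounts
            yield record


def core_processing(records):
    """Aggregate the filtered records: total amount per country code."""
    totals = {}
    for record in records:
        key = record["country"].upper()
        totals[key] = totals.get(key, 0.0) + float(record["amount"])
    return totals


totals = core_processing(
    integration_layer(ingest_csv(CSV_SOURCE), ingest_json(JSON_SOURCE))
)
print(totals)
```

Because every reader yields the same record shape, adding a new source only requires a new `ingest_*` function; the filtering and core processing stay unchanged.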
The walkthroughs collected here use different stacks: plain Python; Apache Airflow with Google Cloud Storage (GCS) and BigQuery; and the Amazon data API with AWS Glue.

Easily build ETL Pipeline using Python and Airflow The Workfall Blog

Building a Scalable ETL Pipeline with AWS S3, RDS, and PySpark A Step

How to Build an ETL Pipeline 7 Step Guide w/ Batch Processing

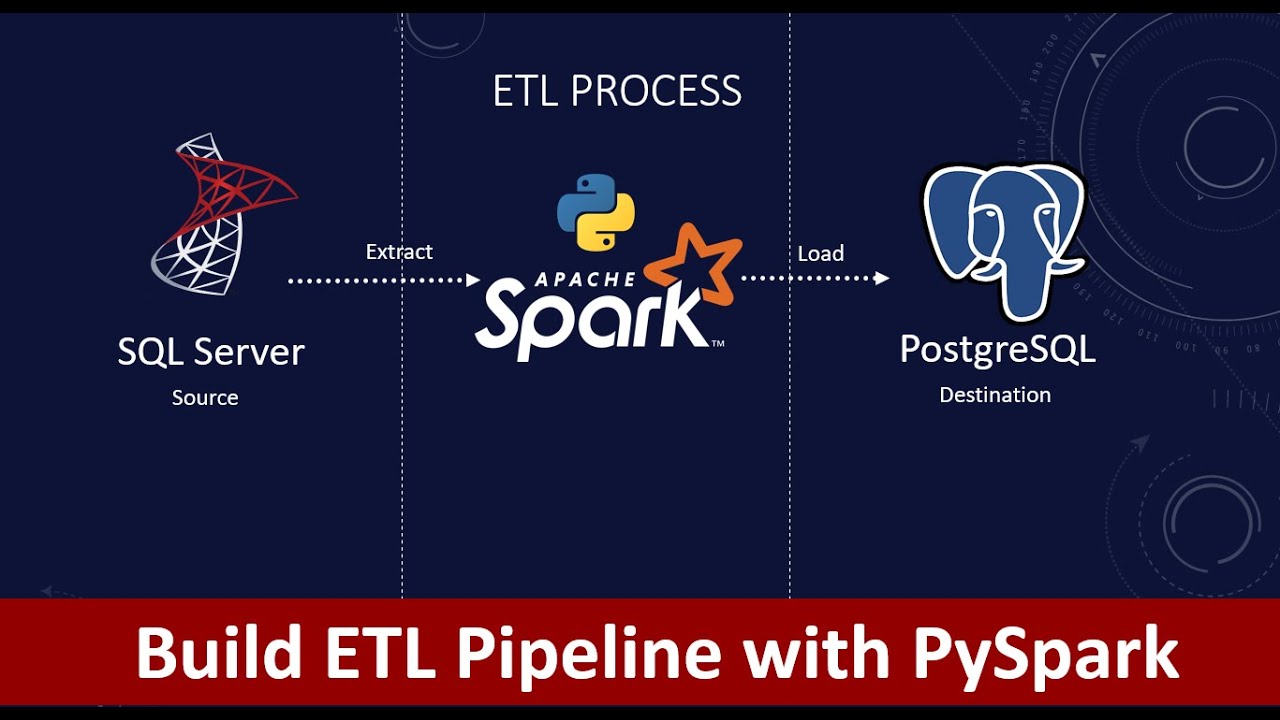

How to Build ETL Pipelines with PySpark? Build ETL pipelines on

Quick Guide to Building an ETL Pipeline Process

What is ETL Pipeline? Process, Considerations, and Examples

How to build and automate a python ETL pipeline with airflow on AWS EC2

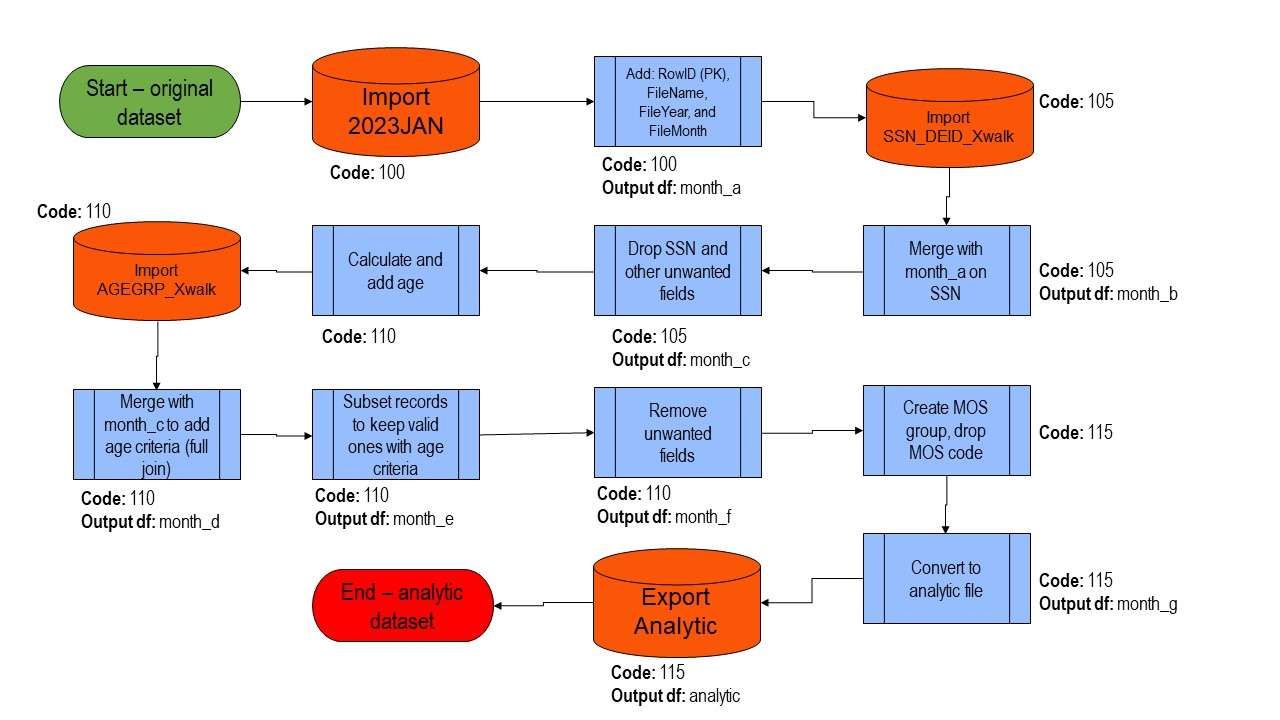

ETL Pipeline Documentation Here are my Tips and Tricks! DethWench

A Complete Guide on Building an ETL Pipeline for Beginners Analytics

How to build and automate a ETL pipeline with AWS airflow AWS EndTo

