Ollama Build Image

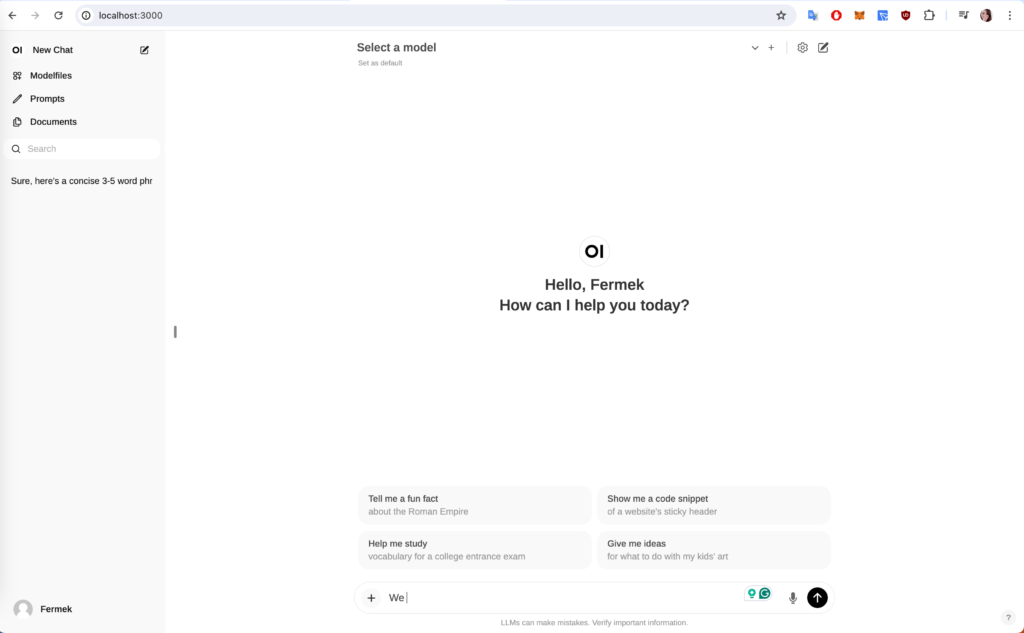

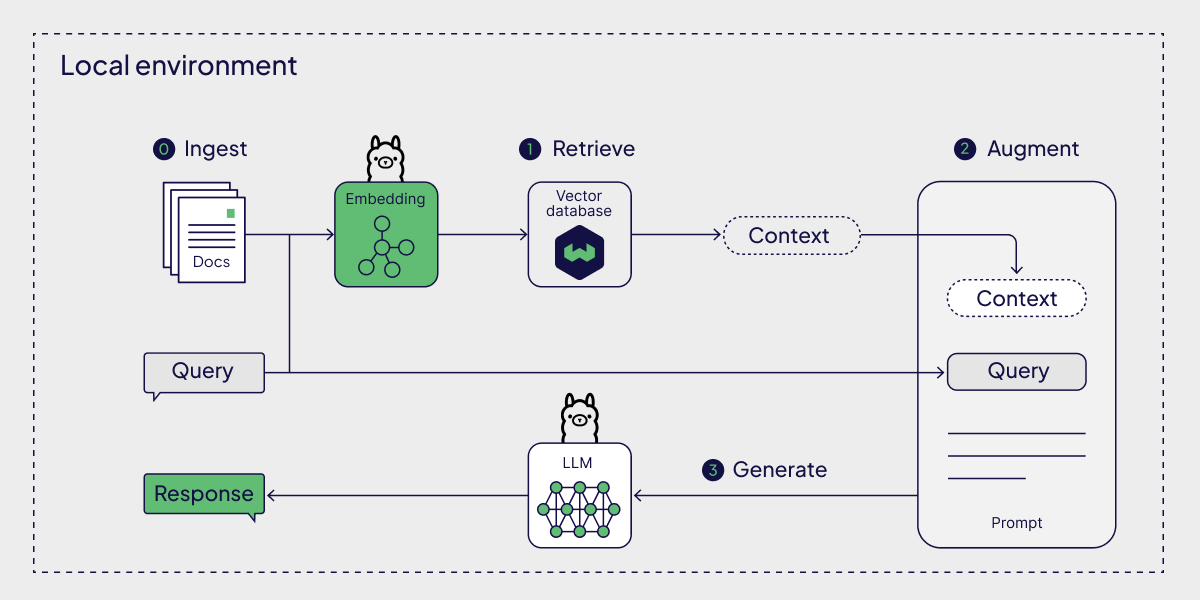

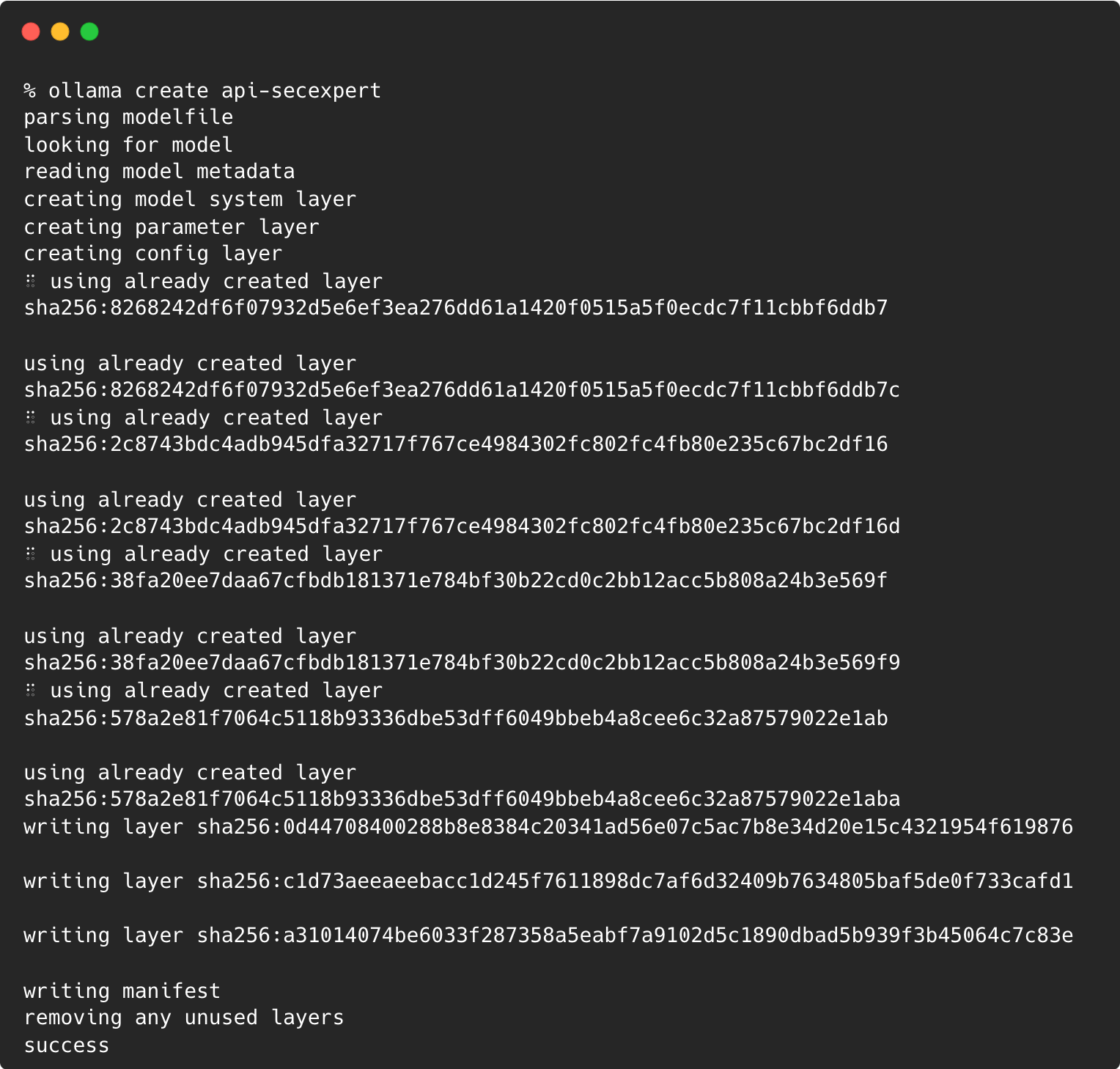

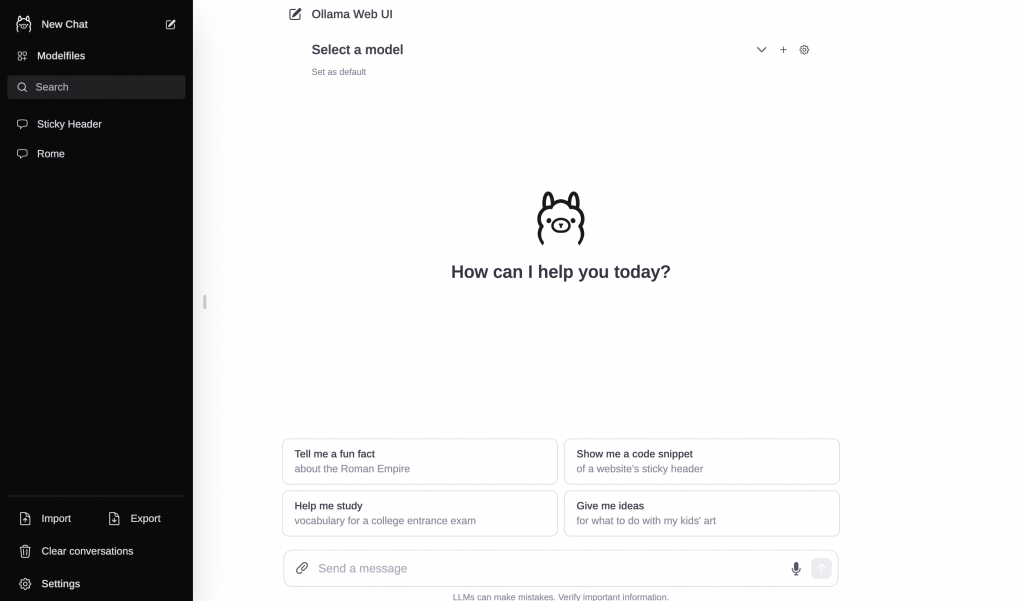

In this post, part 3 of the Ollama blog post series, you will learn how to use Ollama's APIs to generate responses. Ollama is a lightweight, extensible framework for building and running language models on your local machine. Because the models run locally, it can be a more secure and cheaper way to run agents: your data is never sent to a public model provider. It also fits into larger application stacks; for example, you can build your own AI chatbot with .NET Aspire, Blazor and Ollama.

To run and chat with Llama 3.2, use `ollama run llama3.2`. You can also run DeepSeek R1 on your local machine with Ollama. The DeepSeek team has demonstrated that the reasoning patterns of larger models can be distilled into smaller models, resulting in better-performing small models. C4AI Command R7B is an open-weights research release of a 7-billion-parameter model with advanced capabilities optimized for a variety of use cases. This model requires Ollama 0.5.5.

Ollama doesn't yet support Stable Diffusion or creating images. It does support image to text, though, through vision models such as LLaVA 1.6, available in 7B, 13B and 34B parameter sizes. That makes it useful for a photo library: initially, the goal is just to get more insight into your photos and some ability to search them, and an LLM can produce a description of each photo. Image generation itself happens in other tools. ComfyUI, for example, can be configured to send prompts to Ollama and use the text it returns, or its descriptions of existing images, to drive image generation.
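Both ideas above, generating a text response and describing a photo, go through the same `POST /api/generate` endpoint of a running Ollama server. The following is a minimal sketch using only the Python standard library; it assumes the server listens on the default `http://localhost:11434`, and the helper names (`build_generate_payload`, `parse_generate_response`, `generate`) are hypothetical. Images are sent as base64 strings in the `images` field, which only vision models such as LLaVA make use of.

```python
import base64
import json
import urllib.request
from typing import List, Optional

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_payload(model: str, prompt: str,
                           images: Optional[List[bytes]] = None) -> dict:
    """JSON body for POST /api/generate, with streaming turned off."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if images:
        # Vision models such as LLaVA take images as base64-encoded strings.
        payload["images"] = [base64.b64encode(img).decode("ascii") for img in images]
    return payload


def parse_generate_response(body: bytes) -> str:
    """Pull the generated text out of a non-streaming reply."""
    return json.loads(body)["response"]


def generate(model: str, prompt: str, images: Optional[List[bytes]] = None,
             base_url: str = OLLAMA_URL) -> str:
    """Send one request to a running Ollama server and return the answer text."""
    data = json.dumps(build_generate_payload(model, prompt, images)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return parse_generate_response(reply.read())
```

For example, `generate("llama3.2", "Why is the sky blue?")` answers a text prompt, while `generate("llava", "Describe this photo.", images=[open("photo.jpg", "rb").read()])` asks LLaVA for a description (`photo.jpg` is a placeholder). Leaving `stream` enabled instead makes Ollama return newline-delimited JSON chunks, which suits chat interfaces that print tokens as they arrive.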
The next step is to download the Ollama Docker image and start an Ollama container. For a CPU-only setup, `docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama` pulls the image and starts the server on port 11434. If you would rather build the image yourself, the Dockerfile provided in the Ollama repository simplifies the process significantly.

What does the Ollama API do? It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that you can pull and use. You can also create custom models with Ollama using its Modelfile format.
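Once the container is running, a quick way to confirm that the server is reachable on the published port is to call `GET /api/tags`, which lists the models pulled so far. This is a minimal standard-library sketch; the function names are hypothetical and the default URL assumes the `-p 11434:11434` port mapping shown above.

```python
import json
import urllib.request
from typing import List, Optional


def parse_model_names(body: bytes) -> List[str]:
    """Return model names from a GET /api/tags reply."""
    return [entry["name"] for entry in json.loads(body).get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434",
                      timeout: float = 5.0) -> Optional[List[str]]:
    """List installed models, or return None when nothing answers at base_url."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as reply:
            return parse_model_names(reply.read())
    except OSError:
        # URLError, refused connections and socket timeouts are all OSError subclasses.
        return None
```

A `None` result usually means the container is not running or the port is not published; an empty list means the server is up but no model has been pulled yet, which `docker exec -it ollama ollama pull llama3.2` would fix.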
Running inference with your own custom model starts from a Modelfile, which tells Ollama how to build the model from a GGUF file. The Modelfile and the GGUF file, file_name.gguf, should both be located in … For agents, it's crucial to choose models that support tool calling.

Prompting deserves the same care. For image work in particular, crafting a successful prompt requires a nuanced approach that blends linguistic finesse with visual storytelling.
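The Modelfile setup can be scripted. Below is a minimal Python sketch that writes one; `FROM`, `PARAMETER` and `SYSTEM` are Modelfile instructions, while the helper names, the system prompt and the parameter values are illustrative. The sketch assumes the GGUF file is referenced by a relative `./` path, which is an illustrative layout only; point `FROM` at wherever your file actually lives.

```python
from pathlib import Path
from typing import Dict, Optional


def render_modelfile(gguf_name: str, system: Optional[str] = None,
                     parameters: Optional[Dict[str, object]] = None) -> str:
    """Render Modelfile text that builds a model from a local GGUF file."""
    # FROM names the weights; "./" marks a local file path (illustrative layout).
    lines = [f"FROM ./{gguf_name}"]
    for key, value in (parameters or {}).items():
        # One PARAMETER line per setting, e.g. temperature or num_ctx.
        lines.append(f"PARAMETER {key} {value}")
    if system:
        # Triple quotes allow the system prompt to span several lines.
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


def write_modelfile(directory: Path, text: str) -> Path:
    """Write the text to a file named Modelfile and return its path."""
    path = Path(directory) / "Modelfile"
    path.write_text(text, encoding="utf-8")
    return path
```

After writing the file, `ollama create my-model -f Modelfile` builds the model and `ollama run my-model` starts a chat with it; `my-model` is a placeholder name.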
LLaVA 1.6 models support higher-resolution images, improved text recognition and logical reasoning, which makes them well suited to getting a description of each photo with an LLM. Chat front ends such as LobeChat and Open WebUI can connect to a local Ollama server, so these models can also be used from a browser.

A step-by-step guide for installing and running Ollama and Open WebUI

How to Build Ollama from Source on macOS (CA Amit Singh)

Ollama: Build a ChatBot with LangChain, Ollama & Deploy on Docker

Ollama Image Generator Overview (Restackio)

Ollama Modelfile Tutorial: Customize Gemma Open Models with Ollama

Ollama: Building a Custom Model (Unmesh Gundecha)

Ollama: Run, Build, and Share LLMs (Guidady)

Beginner to Master Ollama & Build a YouTube Summarizer with Llama 3 and …

How to Install and Run Ollama with Docker: A Beginner's Guide (Collabnix)