OTX Build YOLO

This post is part of the following series:

Over time, various YOLO versions have been released, each introducing innovations to improve performance, reduce latency, and expand application areas. As discussed before, the YOLO v10 code is built on top of the Ultralytics library and has a similar interface to YOLO v8 (you can check …). The prediction format also differs between versions: in YOLOv7 the prediction is anchor-based, while YOLOv9 predicts a vector, and the converter turns each bounding box into that vector.
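
To make the anchor-versus-vector distinction concrete, here is a minimal pure-Python sketch. It assumes the conventions of the public YOLOv5/YOLOv7 heads, where sigmoid offsets are scaled by the stride and by a pixel-sized anchor. It also assumes the conventions of anchor-free heads such as YOLOv8/YOLOv9, where the prediction is the distances from an anchor point to the four box edges, in stride units. The function names are hypothetical, not the API of any YOLO codebase, and real models decode whole tensors at once rather than single boxes.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def box_to_vector(box: Box, anchor_x: float, anchor_y: float, stride: int) -> tuple:
    """Anchor-free encoding: distances (left, top, right, bottom) from the anchor point, in stride units."""
    return (
        (anchor_x - box.x1) / stride,
        (anchor_y - box.y1) / stride,
        (box.x2 - anchor_x) / stride,
        (box.y2 - anchor_y) / stride,
    )


def vector_to_box(vec: tuple, anchor_x: float, anchor_y: float, stride: int) -> Box:
    """Inverse of box_to_vector: rebuild the pixel box from the four edge distances."""
    left, top, right, bottom = vec
    return Box(
        anchor_x - left * stride,
        anchor_y - top * stride,
        anchor_x + right * stride,
        anchor_y + bottom * stride,
    )


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_anchor(raw: tuple, grid_x: int, grid_y: int,
                  anchor_w: float, anchor_h: float, stride: int) -> Box:
    """Anchor-based decoding (YOLOv5/v7 style) of raw (tx, ty, tw, th) for one anchor in one grid cell."""
    tx, ty, tw, th = raw
    # The predicted center may move from -0.5 to 1.5 cells around its grid cell.
    cx = (sigmoid(tx) * 2 - 0.5 + grid_x) * stride
    cy = (sigmoid(ty) * 2 - 0.5 + grid_y) * stride
    # Width and height range from 0x to 4x the anchor size (anchor given in pixels).
    w = (sigmoid(tw) * 2) ** 2 * anchor_w
    h = (sigmoid(th) * 2) ** 2 * anchor_h
    return Box(cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
```

For a stride-8 feature map with anchor points at cell centers, the point for cell (4, 4) sits at pixel (36, 36). At that point, `box_to_vector(Box(10, 20, 50, 60), 36, 36, 8)` gives `(3.25, 2.0, 1.75, 3.0)`, and `vector_to_box` maps it back to the same box.
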

The overall model flowchart is as follows:

A tutorial on building a YOLO v3 detector from scratch details how to create the network architecture from a configuration file, load the weights, and design the input/output pipelines. This won't be just another theoretical dive; we're rolling up our sleeves and …
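
Building the architecture from a configuration file starts with parsing it. The sketch below assumes the Darknet-style `.cfg` format used by the original YOLO v3 release. That format has `[section]` headers followed by `key=value` lines, plus `#` comments. The sketch turns such a file into a list of blocks that a model builder could walk layer by layer. `parse_cfg` and `yolo_head_filters` are illustrative names, and the config string is a trimmed example rather than a complete network.

```python
from __future__ import annotations


def parse_cfg(text: str) -> list[dict[str, str]]:
    """Split a Darknet-style config into blocks: one dict per [section], values kept as strings."""
    blocks: list[dict[str, str]] = []
    block: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            if block:
                blocks.append(block)  # close the previous section
            block = {"type": line[1:-1].strip()}
        else:
            key, _, value = line.partition("=")
            block[key.strip()] = value.strip()
    if block:
        blocks.append(block)
    return blocks


def yolo_head_filters(yolo_block: dict[str, str]) -> int:
    """Output channels needed by the conv layer feeding a [yolo] block:
    (4 box coords + 1 objectness + classes) for each anchor listed in `mask`."""
    num_anchors = len(yolo_block["mask"].split(","))
    num_classes = int(yolo_block["classes"])
    return num_anchors * (5 + num_classes)


CFG = """
[net]
width=416
height=416

# a single conv layer for illustration
[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
activation=leaky

[yolo]
mask = 6,7,8
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
classes=80
"""

if __name__ == "__main__":
    blocks = parse_cfg(CFG)
    print([b["type"] for b in blocks])  # ['net', 'convolutional', 'yolo']
    print(yolo_head_filters(blocks[-1]))  # 255
```

Keeping values as strings defers type conversion to the layer builder, which knows whether a field is an int, a list, or an activation name. The 255 channels are the familiar 3 × (5 + 80) head of an 80-class YOLO v3 detection layer.
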

Export PyTorch model to OpenVINO IR format

In this article, I want to walk you through the implementation of a pipeline that handles the full optimization of PyTorch models to TensorRT targets and generates the Triton …

Learn how to quantize YOLOX models with ONNX Runtime and TensorRT for INT8 inference.
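
INT8 inference maps each float tensor onto 8-bit integers through a scale. Asymmetric schemes also use a zero point. Both values are estimated from calibration data. The pure-Python sketch below shows only that arithmetic, using simple min/max calibration. It is not the ONNX Runtime or TensorRT API, and real calibrators (entropy, percentile) choose the range differently. The function names are hypothetical. The symmetric variant (zero point fixed at 0) is the scheme TensorRT uses for INT8.

```python
def compute_qparams(values, symmetric=True, qmin=-128, qmax=127):
    """Derive (scale, zero_point) from the observed min/max of calibration values."""
    lo = min(min(values), 0.0)  # the representable range must include 0.0
    hi = max(max(values), 0.0)
    if symmetric:
        amax = max(-lo, hi)
        scale = amax / qmax if amax > 0 else 1.0
        return scale, 0
    scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Float -> int8: divide by the scale, round, shift by the zero point, clamp."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """int8 -> float approximation, as used when reading quantized results back."""
    return [(q - zero_point) * scale for q in q_values]
```

Consider the calibration values `[-1.5, -0.2, 0.0, 0.7, 2.0]`. The symmetric scheme picks `scale = 2.0 / 127` with zero point 0. The asymmetric scheme spends the full 256 levels on the actual `[-1.5, 2.0]` range. In both cases each value survives the round trip to within half a scale step.
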

In this guide, I'll walk you through building a YOLO object detector from scratch using PyTorch.

GitHub Praveen76/BuildYOLOModelfromscratch

On this page, we show how to train, validate, export, and optimize an ATSS model on the WGISD public dataset. To learn more about the object detection task, refer to Object Detection.

We can build an OpenVINO™ Training Extensions workspace with the following CLI:

If you want to rebuild your current workspace by running otx build with other parameters, it's better to delete the original workspace first to prevent mistakes.
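
The advice about rebuilding can be scripted. This is a minimal sketch, assuming the workspace is an ordinary directory on disk. The hypothetical helper below deletes an existing workspace only when a rebuild is explicitly requested and refuses otherwise. That way a new otx build run never mixes with leftovers from an earlier configuration. It does not call OTX and makes no assumptions about the options otx build accepts.

```python
import shutil
from pathlib import Path


def prepare_workspace(workspace, rebuild=False):
    """Ensure `workspace` does not exist before `otx build` recreates it.

    Hypothetical helper: it only manages the directory on disk and never
    invokes OTX, so it makes no assumptions about `otx build` options.
    """
    path = Path(workspace)
    if path.exists():
        if not rebuild:
            raise FileExistsError(
                f"{path} already exists; pass rebuild=True to delete it before rebuilding"
            )
        shutil.rmtree(path)  # remove the old workspace and everything inside it
    return path
```

Once it returns, run otx build with the new parameters against the returned path. Requiring `rebuild=True` keeps an accidental rerun from silently wiping a workspace that is still in use.
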

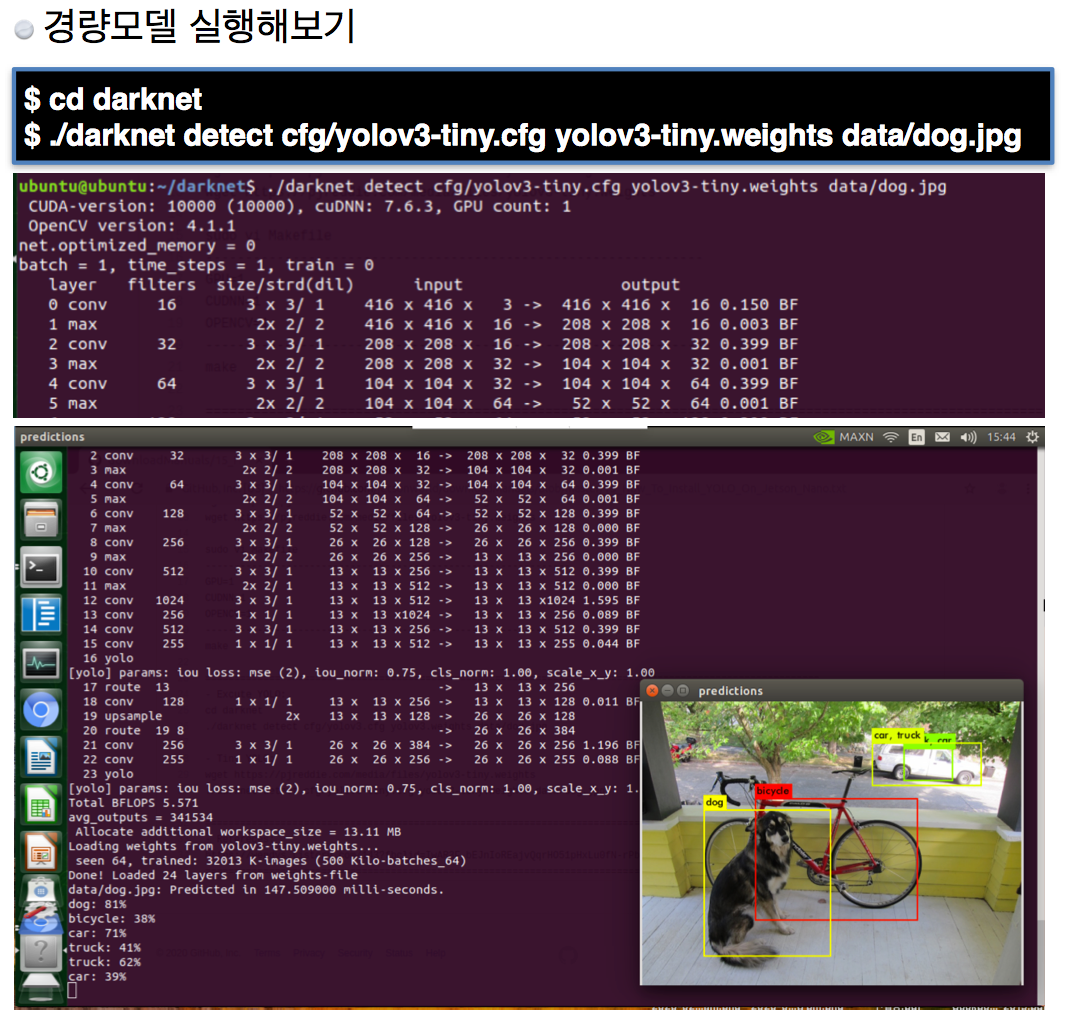

GitHub jugfk/buildYOLO: Installing YOLO v3 on Jetson Nano (GPU + CUDNN + OpenCV only)

Official YOLO v7 Custom Object Detection Tutorial Windows & Linux

Mastering YOLO V8 Object Detection and Hugging Face Deployment YouTube

YOLO v7 The Most Powerful Object Detector YouTube

YOLOv4 Object Detection Crash Course YOLO v4 how it works and how to

muqtadar08/yolo_v8 API reference

Unveiling OTX The Next Frontier in Web 3.0 Powered by AI by OTX

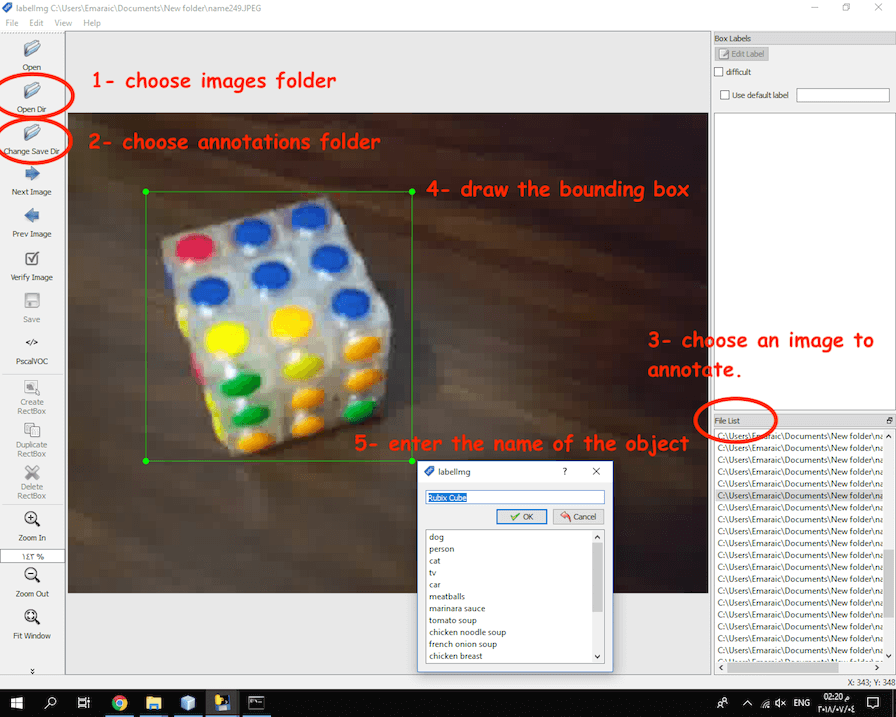

Emaraic: How to build a custom object detector using YOLO