Serverless Memory Metrics On Serverless Build

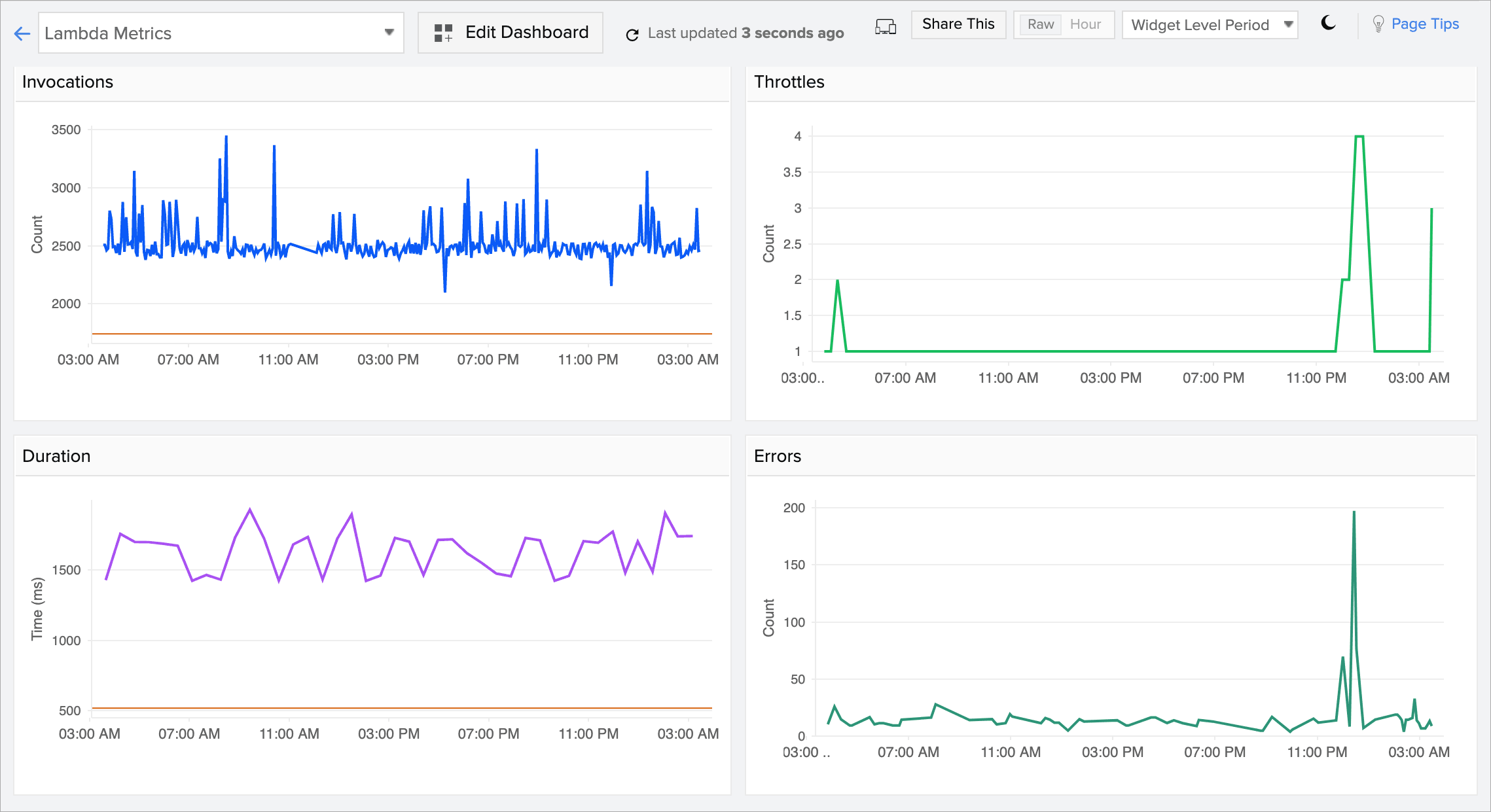

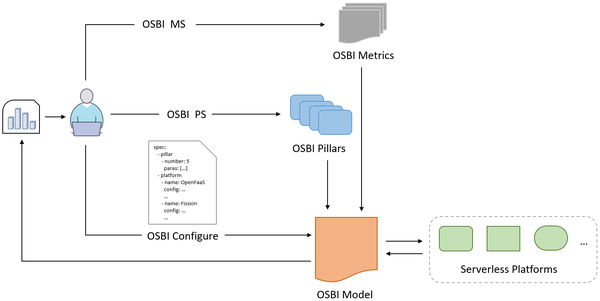

Overview of the metrics feature in Serverless Dashboard, including setup, available charts, and filters. These are the key metrics displayed for Docker containers. Build, deploy, monitor, update, and troubleshoot serverless applications with ease. There's also this tool here that can help with perf and ... First, FuncMem predicts and reduces excessive memory requirements for serverless functions. Popular tools like Node.js and Python often face delays when starting up, hog ... As your serverless application gets more serious, you will want to track metrics more closely using a tool like Datadog, IOpipe, or Honeycomb. Learn about key container metrics displayed in the Infrastructure UI: ... It’s a holistic process, from optimizing Lambda memory to caching, choosing the right ... When I profile my functions locally, the max heap used is ~40 MB. A benchmark suite that can reflect the critical metrics of serverless. Memento alleviates the overheads of serverless memory management by introducing two key mechanisms: ... These metrics effectively measure scalability. By setting a higher base capacity, you can improve the overall performance of your queries, especially for data processing jobs that tend to consume a lot of compute resources. Configuring resources is essential for ensuring your serverless applications are robust, secure, efficient, and scalable. Serverless queue processors like AWS Lambda often exist in architectures where they pull messages from queues such as Amazon Simple Queue Service (Amazon SQS) and ...
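To make the local heap figure mentioned above concrete, here is a minimal Python sketch of measuring peak heap for a single call. It assumes a Python function rather than whatever runtime the original poster used, and `measure_peak_heap` and `build_payload` are hypothetical names chosen for illustration. `tracemalloc` only tracks allocations made through Python's allocator, so the number it reports will usually be lower than the memory of the whole process.

```python
import tracemalloc


def measure_peak_heap(func, *args, **kwargs):
    """Run func and return (result, peak_bytes) for Python-level allocations."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        # get_traced_memory() returns (current, peak) in bytes.
        _current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def build_payload(n):
    # Hypothetical workload: allocate n small strings.
    return [str(i) for i in range(n)]


if __name__ == "__main__":
    payload, peak = measure_peak_heap(build_payload, 100_000)
    print(f"items={len(payload)} peak_heap_mb={peak / (1024 * 1024):.1f}")
```

A locally measured heap peak like this is a lower bound on what a function needs. The runtime itself, loaded modules, and native buffers all add to the memory a platform reports.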
My (unzipped) bundle is ~35 MB, so I would ... Processors that can complete inference on the full dataset are strongly preferred to those that cannot due to their memory. Optimizing serverless performance is about more than just reducing cold starts or picking the right runtime. Each serverless platform offers different tools and ... Serverless computing has changed how teams build apps that scale effortlessly. Second, it dynamically reschedules functions within an invoker, creating ... But for quick and easy monitoring, it's hard ... Serverless architectures are stateless by nature, which can complicate workflows requiring a persistent state.
Average CPU for the container. Elastic Observability Serverless allows you to visualize infrastructure metrics to help diagnose problematic spikes, identify high resource utilization, automatically discover and track pods, ... For a project processing a large dataset, I initially attempted to ... I have been struggling to understand Lambda memory usage.
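One way to see what Lambda itself reports for memory is the `REPORT` line the platform writes to the function's log at the end of each invocation. The sketch below parses that line with a regular expression. It assumes the line keeps the `Memory Size: N MB` and `Max Memory Used: N MB` fields in their usual order. The request ID in the sample is made up, and `parse_report_line` is a hypothetical helper, not part of any AWS SDK.

```python
import re

# Fields are separated by tabs in real logs; \s+ accepts tabs or spaces.
REPORT_PATTERN = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+"
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>[\d.]+) ms\s+"
    r"Memory Size: (?P<memory_size_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_memory_used_mb>\d+) MB"
)


def parse_report_line(line):
    """Return a dict of memory figures from a REPORT line, or None if no match."""
    match = REPORT_PATTERN.search(line)
    if match is None:
        return None
    size = int(match["memory_size_mb"])
    used = int(match["max_memory_used_mb"])
    return {
        "request_id": match["request_id"],
        "duration_ms": float(match["duration_ms"]),
        "memory_size_mb": size,
        "max_memory_used_mb": used,
        # Fraction of the configured memory that was actually used.
        "memory_utilization": used / size,
    }


# Illustrative sample line; the request ID is invented.
SAMPLE = (
    "REPORT RequestId: 11111111-2222-3333-4444-555555555555\t"
    "Duration: 102.25 ms\tBilled Duration: 103 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 68 MB\t"
)

if __name__ == "__main__":
    print(parse_report_line(SAMPLE))
```

Comparing `max_memory_used_mb` with a locally profiled heap size is a quick check on how much of the footprint comes from outside the application's own allocations.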
You can use AWS CloudWatch to log and monitor various metrics for your backend services (CPU utilization, data transfer, memory, etc.).

Building well-architected serverless applications Understanding ...

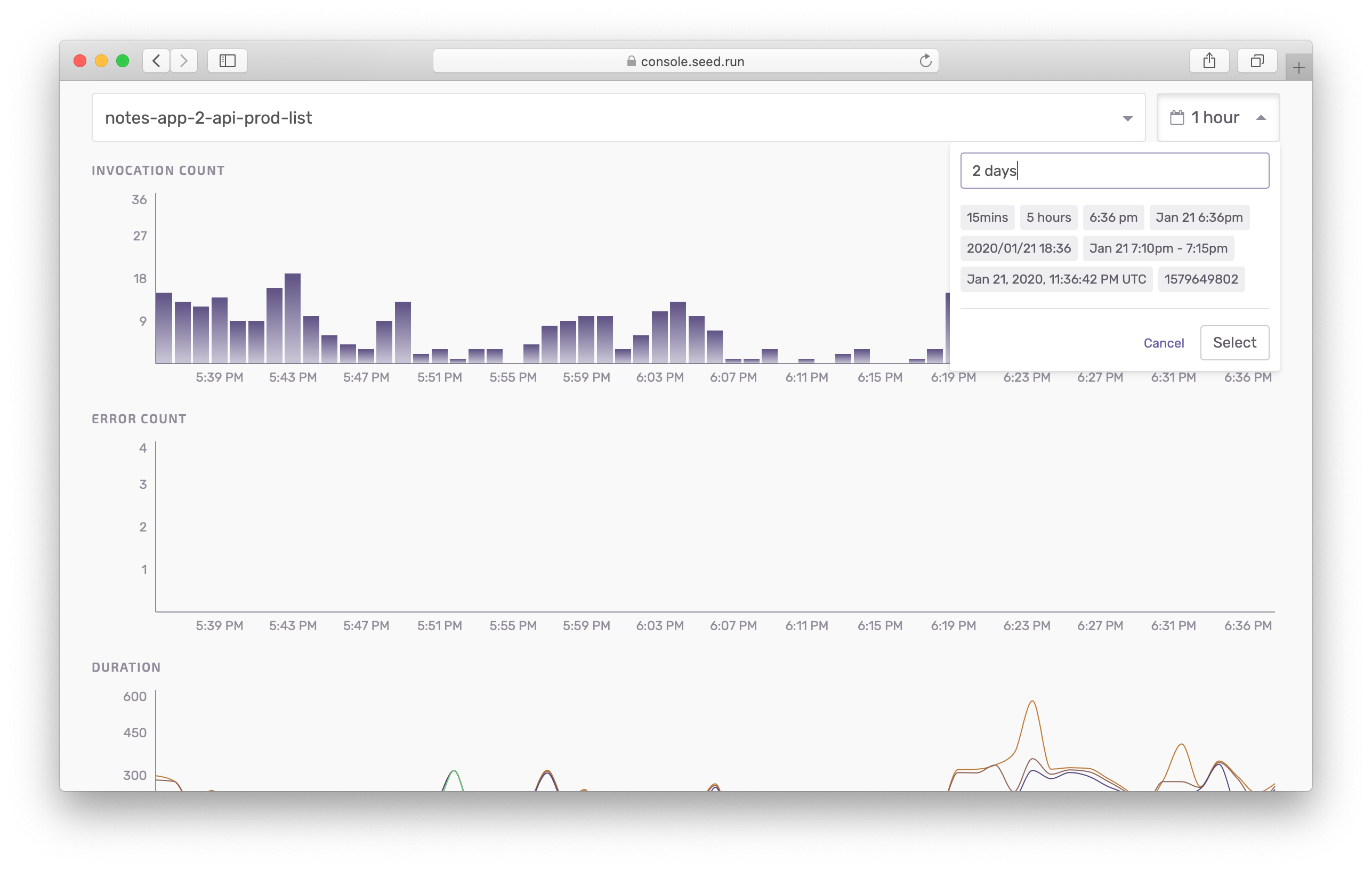

New and Improved Serverless Metrics

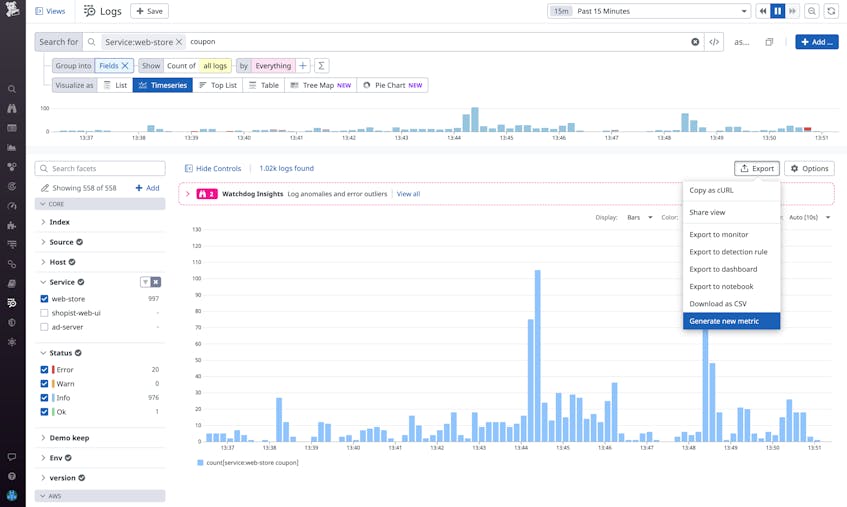

Serverless Monitoring Solutions Splunk

Visualising Serverless Metrics With Grafana Dashboards • notes on software.

Monitor Custom Serverless Metrics With the Datadog Lambda Extension

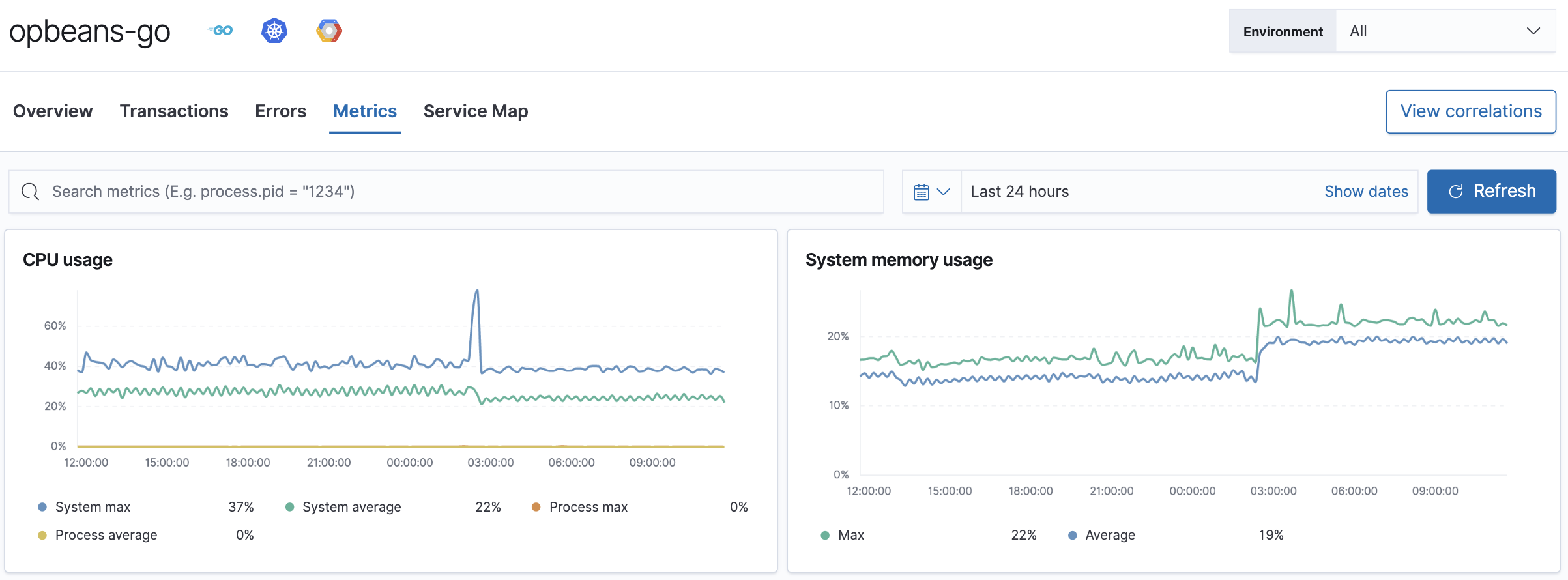

Metrics Serverless Elastic

Serverless Monitoring Site24x7

Open Serverless Benchmark Initiative: Huawei Cloud and Shanghai Jiao Tong University Jointly Release ServerlessBench 2.0 (Zhihu)

Building Serverless Observability Tools With Custom Metrics and

Serverless Analytics Metrics, Collection and Visibility The New Stack


Serverless computing has been hyped as the ultimate solution to scalability and elasticity, but many who adopted it were quickly disappointed by rising costs and maintenance.


