SparkSession Builder

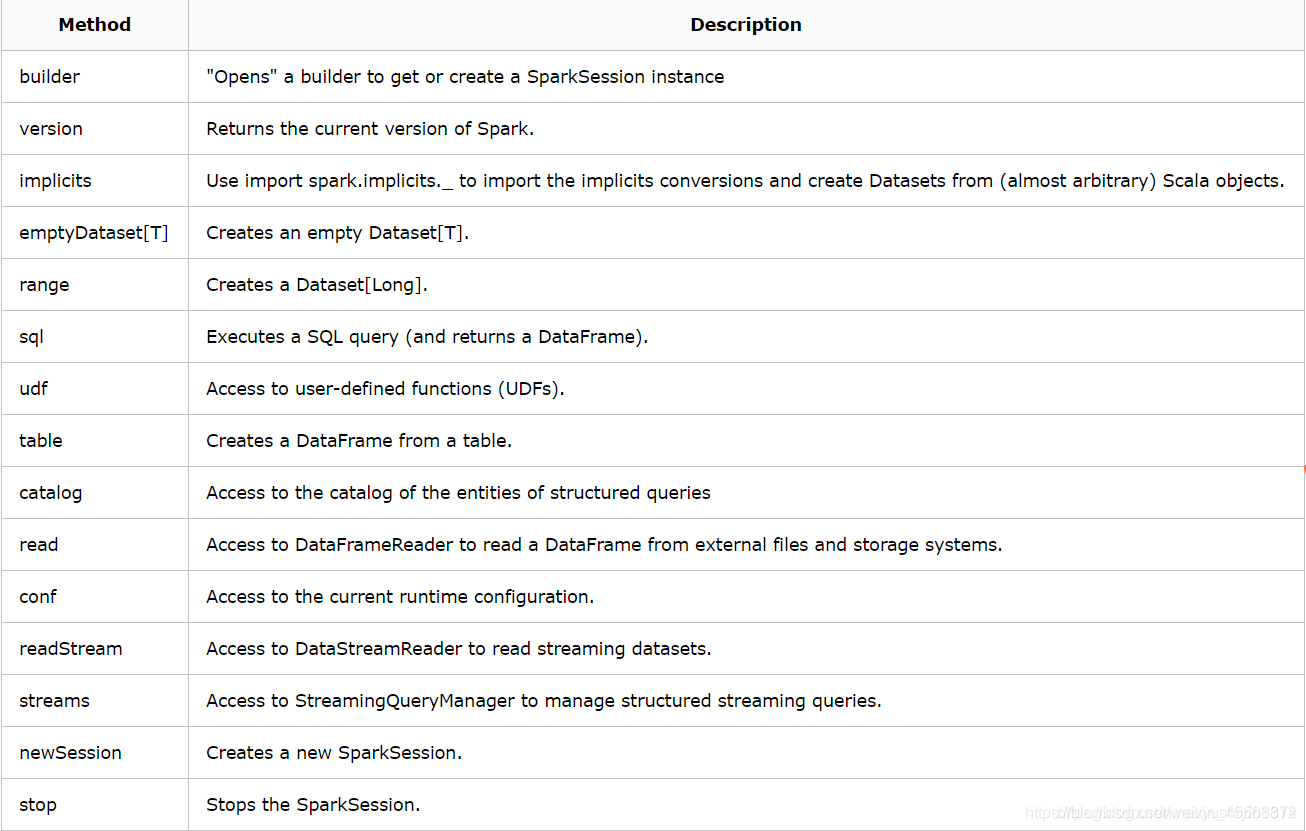

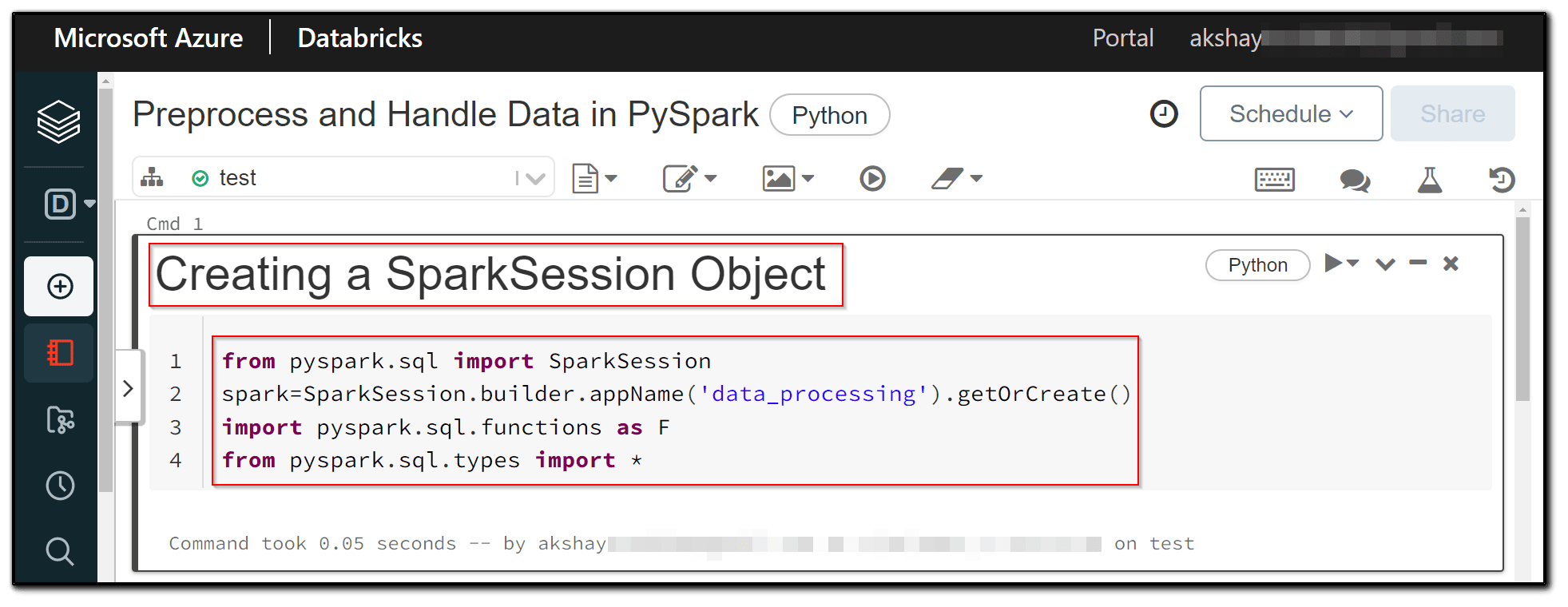

SparkSession Builder: learn how to create and configure a SparkSession using the `SparkSession.Builder` class, and see its methods and parameters for setting `appName`, `config`, `master`, and `enableHiveSupport`.

SparkSession is the entry point to Spark SQL, and it is one of the very first objects you create while developing a Spark SQL application. From Spark 2.0, SparkSession provides a common entry point for a Spark application: instead of SparkContext, HiveContext, and SQLContext, everything is now within a SparkSession. In some tutorials you will still see a SparkContext object used; that object has been encapsulated in the SparkSession object since Spark 2.0. Creating a Spark session is a crucial step when working with PySpark for big data processing tasks, and it gives you access to the Dataset and DataFrame API.

As a Spark developer, you create a SparkSession using the builder. In PySpark the builder is available as the `SparkSession.builder` attribute; in Scala and Java it is the `SparkSession.builder()` method. `appName` sets a name for the application, which will be shown in the Spark web UI. `getOrCreate` gets the current SparkSession or creates a new one, so the same call both creates a session and reuses an existing one. `getActiveSession` returns the session that is already active, and the active SparkSession can also be cleared. The builder can also be used to create a new session.

The builder can inject extensions into the SparkSession. This allows a user to add analyzer rules, optimizer rules, planning strategies, or a customized parser.

A SparkSession can convert a BaseRelation created for external data sources into a DataFrame. A DataFrame can in turn create a local temporary view; the lifetime of this temporary table is tied to the SparkSession that was used to create the DataFrame.

Handling bad records is crucial to ensure the accuracy and reliability of data processing and analysis. Corrupted records are data records that have been damaged.

PySpark Session3 SparkSession Vs SparkContext Bigdata Online

PySpark Create a SparkSession

Create SparkSession in PySpark PySpark Tutorial for Beginners YouTube

PySpark What is SparkSession? Spark By {Examples}

SparkSession的简介和方法详解_sparksession.builderCSDN博客

Preprocess and Handle Data in PySpark Azure DataBricks

Spark SQL(一):核心类、Spark Session APIs、Configuration、Input and Output

Spark Concepts pyspark.sql.SparkSession.builder.config Quick Start

Spark SQL快速入门(基础)阿里云开发者社区

Apache Spark >> SparkSession, Create DataFrame & That's it ! Code Snippets

The Lifetime Of This Temporary Table Is Tied To The SparkSession That Was Used To Create This DataFrame.

This Guide Will Walk You Through The Process Of Setting Up A Spark Session In PySpark.

The SparkContext Object Has Been Encapsulated In The SparkSession Object Since Spark 2.0.

To Create A Spark Session, You Should Use The SparkSession.builder Attribute.
