Building RAG Agents with LLMs

Upon completion of this Building RAG Agents with LLMs training course, participants can compose an LLM system that interacts predictably with a user by leveraging internal and external reasoning components, design dialog management and document reasoning, and deploy an agent system in practice, scaling it up to meet the demands of users and customers. The badge earner understands the concepts of RAG with Hugging Face, PyTorch, and LangChain and how to leverage RAG to generate responses for different applications such as chatbots.

Building RAG agents using LLMs is a challenging yet rewarding process that requires technical skills and knowledge in machine learning and NLP. Explore the steps involved in building a RAG system, the tools required, and best practices for implementation, ensuring that businesses can achieve greater ROI through strategic AI. We will build a simple RAG system using LlamaIndex.

LLMs were trained at a specific time and on a specific set of data. RAG allows responses to be grounded in current and additional data rather than depending solely on the model's training data.
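To make the grounding idea concrete, here is a minimal sketch in plain Python. It does not use LlamaIndex or LangChain: the sample documents, the bag-of-words scoring, and the function names are all assumptions chosen for illustration, and a real system would use an embedding model and a vector index instead.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base (illustrative data only).
DOCUMENTS = [
    "The support portal moved to a new address in March.",
    "Refunds are processed within five business days.",
    "The office cafeteria serves lunch from noon to two.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    scored = [(cosine_similarity(query, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_grounded_prompt(question, documents, k=1):
    """Put the retrieved passages in front of the question before it goes to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


if __name__ == "__main__":
    print(build_grounded_prompt("How long do refunds take?", DOCUMENTS))
```

The prompt returned by `build_grounded_prompt` is what would be sent to the model, so the answer can draw on data the model never saw during training.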
LangChain is a Python framework designed to work with various LLMs and vector databases, making it ideal for building RAG agents. This article overviews 10 of the most popular building blocks in LangChain you may want to consider if you are keen on building RAG systems using this powerful framework. Install LangChain and its dependencies before you start. In Azure AI Foundry, use a vector index and retrieval augmentation. First, you'll need a function to interact with your local Llama instance, though the system likely performs better with advanced commercial LLMs like GPT-4o.
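A function for talking to a local Llama instance could look roughly like the sketch below. The source does not say how the model is served, so this assumes an Ollama-style HTTP server on `localhost:11434` and a model named `llama3`; both values, and the helper names `build_request` and `ask_local_llama`, are assumptions to adjust for your own setup.

```python
import json
import urllib.request

# Assumptions: an Ollama-style server on the default port and a model called "llama3".
LLAMA_URL = "http://localhost:11434/api/generate"
MODEL_NAME = "llama3"


def build_request(prompt, model=MODEL_NAME, url=LLAMA_URL):
    """Build the HTTP POST request carrying the prompt as JSON."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_llama(prompt, timeout=60):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With streaming disabled, the generated text is expected in the "response" field.
    return body.get("response", "")


if __name__ == "__main__":
    # Requires the local server to be running.
    print(ask_local_llama("Summarize what RAG is in one sentence."))
```

Keeping request construction separate from the network call makes the function easy to check without a running model, and easy to point at a different backend later.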
Build your own AI multi-agent system. It allows developers to define and configure multiple agents. LangChain's unified interface for adding tools and building agents is great.
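As a rough illustration of defining and configuring multiple agents, the sketch below uses only the Python standard library. It is not the API of LangChain or any other framework: the `Agent` class, the keyword-based `route` function, and the example agents are hypothetical, and the handlers are stand-ins for real LLM or retrieval calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    """A hypothetical agent: a name, a role description, and a handler function."""
    name: str
    role: str
    handler: Callable[[str], str]
    keywords: List[str] = field(default_factory=list)


def route(message, agents, default):
    """Pick the first agent whose keywords appear in the message, else the default."""
    words = message.lower().split()
    for agent in agents:
        if any(keyword in words for keyword in agent.keywords):
            return agent
    return default


# Example configuration; each handler is a placeholder for a real model or tool call.
RETRIEVER = Agent(
    name="retriever",
    role="Looks up relevant documents",
    handler=lambda m: f"[retriever] searching for: {m}",
    keywords=["find", "search", "document"],
)
SUMMARIZER = Agent(
    name="summarizer",
    role="Condenses long text",
    handler=lambda m: f"[summarizer] summarizing: {m}",
    keywords=["summarize", "summary"],
)
FALLBACK = Agent(
    name="assistant",
    role="Handles everything else",
    handler=lambda m: f"[assistant] answering: {m}",
)
AGENTS = [RETRIEVER, SUMMARIZER]


def dispatch(message):
    """Route the message to an agent and return that agent's reply."""
    return route(message, AGENTS, FALLBACK).handler(message)


if __name__ == "__main__":
    print(dispatch("Please summarize this report"))
```

Keyword routing is only a placeholder here; in practice an LLM often decides which agent should handle a request.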
Unlock the potential of advanced LLM systems with our Building RAG Agents with LLMs (NVIDIA) course. Our journey begins with an introduction to the workshop, setting the stage for the deep dive that follows. Contribute to sanjanb/building_rag_agents_with_llms development by creating an account on GitHub.

Building Reliable LLM Agent using Advanced RAG Techniques

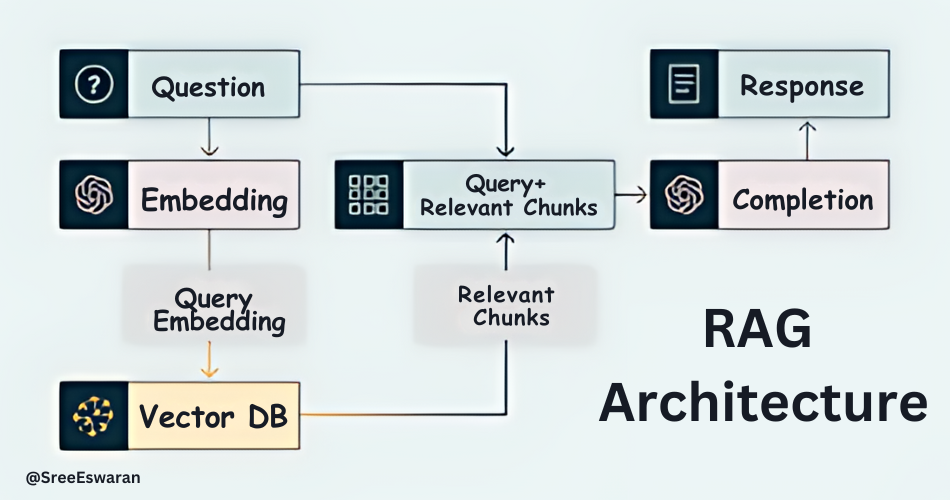

Build RAG Agents using LLMs Step-by-step Guide by Sree Deekshitha

How to Build an LLM RAG Model with Custom Tools and Agents! by

GitHub syedamaann/BuildingRAGAgentsforLLMs Scalable LLM

DLI Building RAG Agents with LLMs AI Foundation Models and

Building LLM Agents for RAG from Scratch and Beyond A Comprehensive

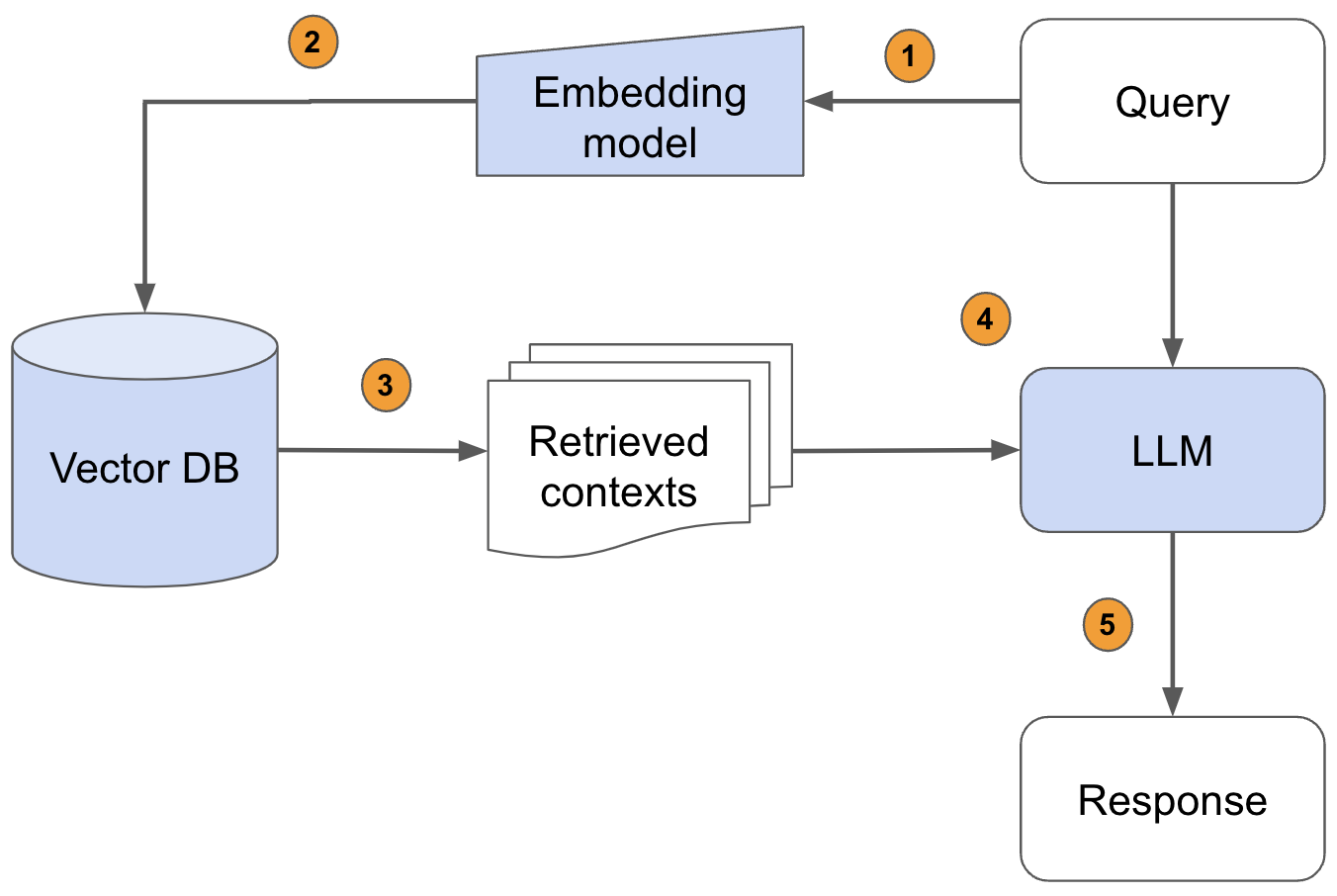

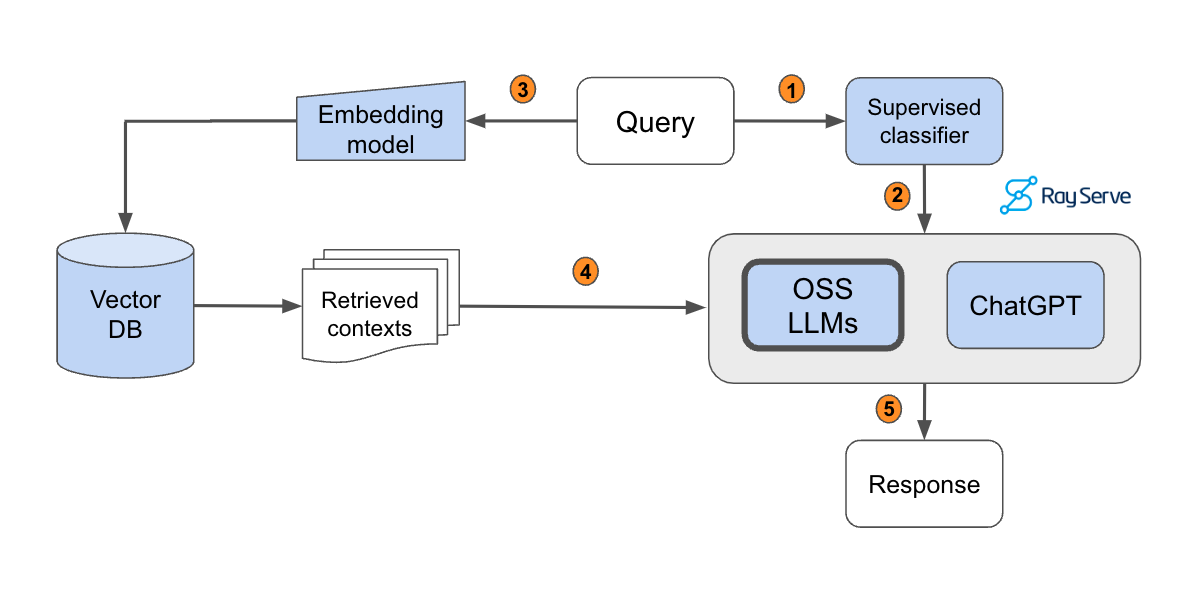

Building RAG-based LLM Applications for Production
